The cost of generative AI can seem daunting. Many imagine powerful algorithms that carry steep bills. Yet AWS generative AI services offer flexible pricing to fit different needs. In this guide, we will break down generative AI pricing. You will discover how to manage costs, see real use cases, and learn about generative AI cost factors. We will also highlight AWS generative AI pricing structures you can adapt. With these insights, you will be better prepared to unlock AI benefits while controlling spend.

AWS (Amazon Web Services) and its role in the AI space

Amazon Web Services is known for its vast cloud offerings. It covers everything from basic compute and storage to specialized tools for model training. This range lets organizations scale cost-effectively. You pay only for the resources you use, which keeps overhead low. This model shapes modern application design and shortens the path from data to working products.

AWS invests heavily in artificial intelligence. Its goal is to give businesses of all sizes access to these powerful solutions. You can build anything from a simple chatbot to a complex system for advanced performance analytics. AWS provides ready-made services that let you skip building everything from scratch. This commitment helps businesses adopt AI without needing giant in-house teams.

The AWS ecosystem spans industries such as retail, e-commerce, healthcare, and finance. This broad support makes AWS a key player in generative AI. You can mix and match services such as SageMaker, Bedrock, and Comprehend, each covering a stage of your AI pipeline from data preprocessing to final inference. Together, they let you build custom solutions to real-world challenges.

What is AWS Generative AI?

Generative AI refers to AI models that create new text, code, images, or other content. Whereas classification models assign labels to existing data, generative models produce new outputs. AWS provides an accessible path to this technology. For example, you can build an AI-driven content engine that drafts customer support scripts or marketing copy, and AWS’s infrastructure makes such ideas simpler to scale.

Why does generative AI matter? In an era of personalization and engagement, AI-driven content can transform user experiences. Automated text, generated images, or advanced dialogue systems can set your brand apart. AWS underpins this with secure building blocks, and its services handle your data with enterprise-grade compliance controls. This gives you the confidence to explore new ways to serve your customers.

Below are examples of AWS generative AI use cases:

- Marketing content creation: AI writes social posts, blog excerpts, or product listings.

- Data analysis summaries: compress large datasets into short summaries to guide decisions.

- Creative brainstorming: artists generate concept art or style references in minutes.

- Internal task automation: write code snippets, memos, or briefs, freeing staff for high-level work.

These examples show how generative AI delivers practical value across many fields.

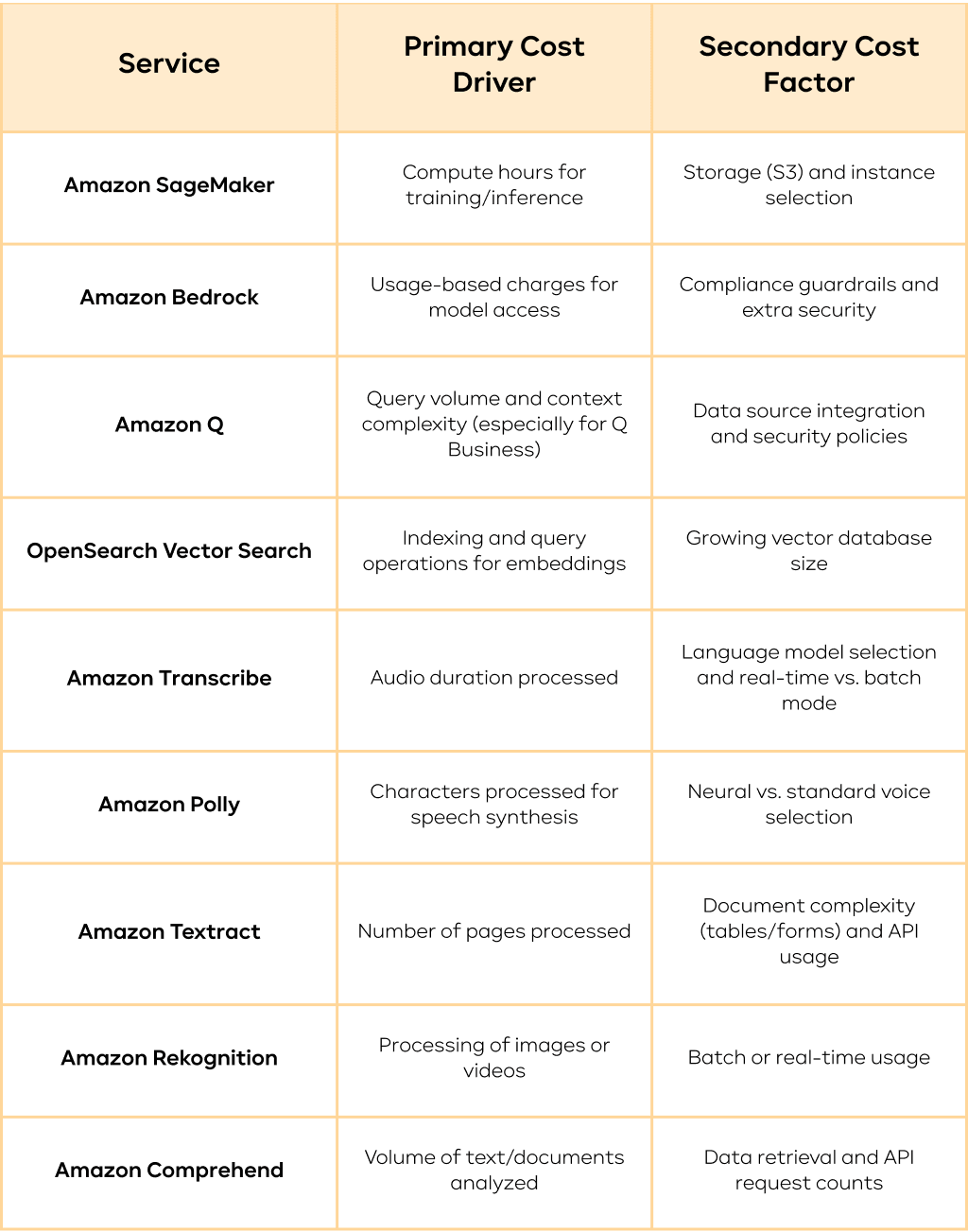

Key AWS Generative AI services

AWS provides many services for training, inference, data handling, and more. Before moving to the cost of generative AI, let’s review AWS’s major offerings, each tailored to different tasks.

Amazon SageMaker

Amazon SageMaker is a managed service for building, training, and deploying AI models. It frees you from handling servers yourself.

Key features are:

- SageMaker Studio: an integrated development environment (IDE) for ML.

- Optimized built-in algorithms and pretrained models.

- Scalability: spin up or down based on workloads.

- Fine-tuning: adapt pretrained models to your domain using your own labeled data.

- Deployment options: real-time inference endpoints, batch transform, SageMaker Asynchronous Inference.

- SageMaker JumpStart: prebuilt solutions, models, and example notebooks.

- Model Hosting & MLOps

- Cost optimization features: managed spot training, multi-model endpoints, Serverless Inference.

- Integration: connect with S3 for data storage and CloudWatch for resource monitoring.

Imagine a retailer that wants more relevant product suggestions. SageMaker can help it fine-tune a model that tailors recommendations, then handle deployment and autoscaling. Data scientists can track results in real time and keep refining the model for the best outcomes.

Amazon Bedrock

Amazon Bedrock is a fully managed service that gives you access to foundation models through a single API. It makes large pretrained models easy to integrate into your application.

You can pick from specialized models that handle text generation or translation, among other tasks. Bedrock then streamlines the workflow, so you do not juggle multiple libraries or frameworks.

Its key features are:

- Access to foundation models (FMs) via API

- Serverless and fully managed

- Model customization (fine-tuning and continued pretraining) and retrieval-augmented generation (RAG) through Knowledge Bases

- Enterprise-grade security and compliance

- Agent capabilities for orchestration

For example, take an HR team that wants automated drafts for job postings. Amazon Bedrock can provide such a solution. Using it, the team can select a text-generation model that handles multiple languages. They can also set security guardrails to protect sensitive applicant data.

Amazon Q

Amazon Q is a generative AI assistant for conversational use cases. It works well for chatbots, internal help desks, and developer assistance.

The main features of Amazon Q are:

- AI assistant for developers

- Infrastructure setup with Q Developer

- Contextual assistance in AWS Console

- Q for business users (Amazon Q Business)

- Enterprise security and access controls

- Use cases across roles

- Dialog management across multi-turn chats.

- Simple integration with CRMs or knowledge bases in real time.

- Usage in internal help desks or external customer support.

Imagine a telecom brand that wants to reduce incoming support calls. With Amazon Q, it can create a virtual agent that handles billing queries and account details, reducing the load on call centers.

OpenSearch Vector Search

OpenSearch Vector Search supports semantic search. It uses vector embeddings to find content by meaning, not just keywords.

Key features:

- Native vector indexing

- Semantic search: finds related items even if they do not share exact terms.

- Fast and scalable: manages high query volumes.

- Hybrid search support

- Flexible indexing and document structure

- Integration with RAG architectures

- Security and access control

- Integration with S3 or AWS Lambda for advanced analytics on unstructured data

Consider a media platform that hosts user videos and articles. To give users better results, it adopts vector search. When someone searches for “easy cooking tips,” the results also include relevant meal-prep advice or cooking hacks, even if those items never use the exact phrase.

Amazon Transcribe

Amazon Transcribe turns speech into text. You can use it for meetings, podcasts, or live events.

Its main features are:

- Real-time and batch transcription

- Multilingual support

- Custom vocabularies and custom language models

- Speaker identification

- Word-level timestamps

- Audio channel identification

- Security and compliance

- Content redaction and vocabulary filtering

- Easy integration with S3, AWS Lambda, Amazon Comprehend, and Bedrock

Amazon Transcribe is widely adopted in closed captioning. It enhances accessibility for video or live streams. The tool is also used in contact centers to track calls for compliance and quality control. In legal workflows, it transcribes audio evidence for easier reviews.

Amazon Polly

Amazon Polly converts text into lifelike speech. It benefits apps that serve users who prefer or require audio.

Its features are:

- Lifelike speech with neural TTS (NTTS)

- Wide choice of languages, accents, and voices

- Brand Voice (custom neural voice)

- Speech Synthesis Markup Language (SSML) support for controlling pauses, emphasis, pronunciation, and prosody

- Real-time and batch synthesis

- Custom lexicons

- Multiple output formats (MP3, OGG, PCM)

- Integration with S3, AWS Lambda, API Gateway, Amazon Lex, and Amazon Bedrock Agents

- Security and cost control

Amazon Polly is widely used in e-learning, media, healthcare, gaming, and accessibility tech.

Amazon Textract

Textract extracts text and structure from files. It reads forms, tables, and other elements, going beyond basic OCR.

This service has the following features:

- Intelligent text extraction (from PDFs, images, scanned documents).

- Form and key-value pair extraction

- Table detection

- Handwriting recognition

- Security and compliance

- Query-based extraction (Textract Queries)

- Preprocessing for AI pipelines

- Integration and automation

Amazon Textract offers workflow automation and scalability. It speeds up data entry tasks and handles thousands of documents in short timeframes. The tool is applied in finance, healthcare, insurance, legal, and the public sector.

Amazon Rekognition

Rekognition analyzes images and videos. It detects objects, faces, and scenes in media, which makes it useful for content moderation and automated tagging.

Its key features are:

- Object and scene detection

- Face detection and analysis within compliance limits

- Face comparison and recognition

- Video analysis

- Unsafe and inappropriate content detection

- Custom labels with minimal setup

- Security and compliance

- Easy integration with AWS Lambda, S3, Step Functions, and EventBridge

Amazon Rekognition is especially valuable for retail, media, public safety, fintech, and healthcare.

Amazon Comprehend

Comprehend performs sentiment analysis, entity recognition, and topic modeling. It unlocks text-based insights for your business.

The main features of this service are:

- Named Entity Recognition (people, organizations, dates, locations, quantities, etc.)

- Sentiment analysis (positive, negative, neutral, or mixed)

- Key phrase extraction

- Custom classification and entity recognition

- Syntax analysis

- Language detection

- Semantic relationship detection

- Medical language processing (Amazon Comprehend Medical)

- Security and integration with S3, Lambda, Textract, SageMaker, and Step Functions

Amazon Comprehend helps businesses gain market insights, analyze support tickets, and monitor brand perception. Its NLP capabilities are widely used in finance, healthcare, legal, customer support, and retail.

Below, you can review the main AWS generative AI services and their primary cost drivers.


Pricing models for AWS Generative AI

AWS pricing is not one-size-fits-all. Depending on the service, you are billed by usage, by characters or tokens processed, or by subscription tier, and the structure you choose shapes your final cost of generative AI.

Pay-as-you-go pricing

With the pay-as-you-go pricing model, you get billed only for what you use. It is perfect for new or unpredictable workloads.

Key benefits:

- Flexible: align spend with real-world needs.

- Direct cost correlation: spend scales in step with usage.

- Low entry barrier: no large upfront expenses.

- Transparency and granular billing.

Despite its positive sides, this model has some limitations:

- Unpredictable cost of generative AI at scale if not monitored

- No built-in discounts

- Cost inefficiencies for steady, high-throughput use

- Need for usage monitoring and cost optimization

- Limited predictability for token-based services

Pay-as-you-go pricing makes sense for early-stage GenAI projects, event-driven or on-demand AI features, multitenant SaaS platforms with unpredictable user load, and adding GenAI features to existing workloads.
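To make the pay-as-you-go idea concrete, here is a minimal Python sketch that turns metered usage into a monthly estimate. The meter names and unit prices are illustrative placeholders (assumptions), not quoted AWS rates; substitute the current figures from each service's pricing page for your region.

```python
# Illustrative placeholder unit prices in USD (assumptions, not quoted AWS rates).
PLACEHOLDER_UNIT_PRICES = {
    "transcribe_minutes": 0.024,            # per audio minute
    "polly_characters": 4.00 / 1_000_000,   # per character
    "textract_pages": 0.0015,               # per page
}


def pay_as_you_go_bill(usage: dict[str, float], prices: dict[str, float]) -> float:
    """Multiply each metered quantity by its unit price and sum the results.

    Raises KeyError if a meter has no price, so unpriced usage is never silently ignored.
    """
    return round(sum(quantity * prices[meter] for meter, quantity in usage.items()), 2)


if __name__ == "__main__":
    month = {"transcribe_minutes": 1_000, "polly_characters": 2_000_000, "textract_pages": 5_000}
    print(pay_as_you_go_bill(month, PLACEHOLDER_UNIT_PRICES))
```

With these placeholder rates, the sample month comes to $39.50. Because the bill is a plain sum of quantity times price, doubling any meter doubles its share of the bill, which is exactly why unmonitored growth can surprise you at scale.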

Free tier

AWS offers a free tier on many services for small-scale tests. You can try them before fully committing.

In addition to zero-cost exploration, there are a few more benefits of this tier:

- Safe environment for experimentation

- End-to-end GenAI pipelines with minimal cost if volumes stay within limits

- Eligibility for custom pricing/credits

There are, however, limitations to know about:

- Only certain amounts of time or requests are free.

- Not all AI services are available under the free tier.

- Limited performance options, since free usage typically covers only small instance types.

- No SLA or priority support.

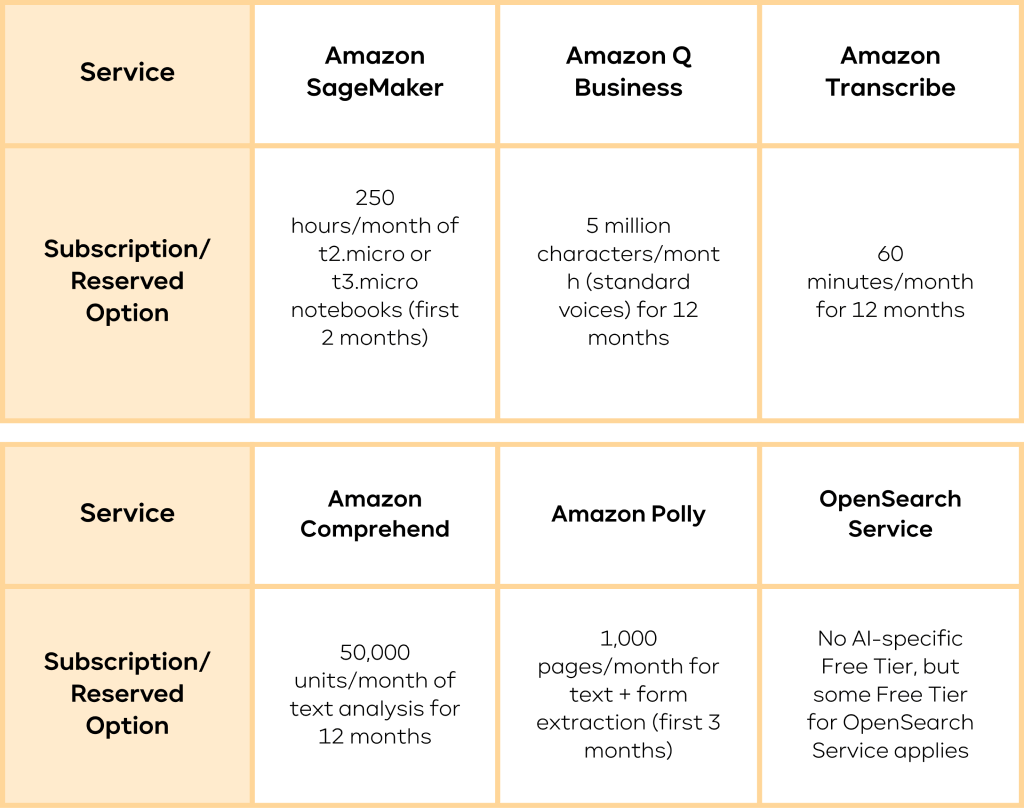

Example of free tier limits as of 2025:

| Service | Free Tier Allocation |
|---|---|
| Amazon SageMaker | 250 hours/month of ml.t2.medium or ml.t3.medium notebook usage (first 2 months) |
| Amazon Polly | 5 million characters/month (standard voices) for 12 months |
| Amazon Transcribe | 60 minutes/month for 12 months |
| Amazon Comprehend | 50,000 units/month of text analysis for 12 months |
| Amazon Textract | 1,000 pages/month for text detection and 100 pages/month for form and table analysis (first 3 months) |
| OpenSearch | No AI-specific Free Tier; the general OpenSearch Service Free Tier applies |
| Amazon Bedrock | Trial model access credits available (e.g., $20–$300 depending on account and usage) |
| Amazon Q | Amazon Q Developer offers a free tier with monthly limits; Amazon Q Business is billed per user |

Note: Always check AWS Free Tier documentation for updates by region and service.

The free tier is applicable when you want to try a service at no cost, experiment, make a prototype, or compare AWS with other cloud providers.
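Free-tier allowances only cover usage up to a limit and, for many services, only for a set number of months. The sketch below shows how to compute the billable remainder; the month counter and the per-minute rate are assumptions for illustration, while the 60-minute, 12-month allowance comes from the Amazon Transcribe row in the table above.

```python
def billable_usage(usage: float, free_allowance: float, month_index: int, free_months: int) -> float:
    """Return the part of a month's usage that is billed.

    month_index counts months since the free tier started (0 = first month).
    Once free_months have passed, all usage is billable.
    """
    if month_index >= free_months:
        return usage
    return max(0.0, usage - free_allowance)


if __name__ == "__main__":
    # Amazon Transcribe row from the table: 60 minutes/month for 12 months.
    rate_per_minute = 0.024  # placeholder rate (assumption); check current pricing
    for month, minutes in [(0, 45), (3, 150), (12, 150)]:
        billed = billable_usage(minutes, free_allowance=60, month_index=month, free_months=12)
        print(f"month {month}: {billed:.0f} billable minutes, ~${billed * rate_per_minute:.2f}")
```

The third case shows the easy-to-miss effect: once the free period ends, the whole month's usage becomes billable, not just the overage.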

Subscription-based pricing

Some services charge a flat monthly or annual fee, such as a per-user subscription, which suits consistent workloads. This is subscription-based pricing.

It offers a few great benefits for businesses that commit:

- Lower unit costs at scale

- Budget predictability

- Access to enterprise-only features

- Reserved capacity

This pricing model has some limitations, too:

- Less flexibility: you pay no matter what the actual use is

- Commitment risk for rapidly changing workloads

- Complex setup or approval for some features

- Not widely available among AWS GenAI services

Look at the subscription examples for some AWS generative AI services in the table below:

Subscription is ideal if you have predictable workloads or know your monthly or yearly spend.

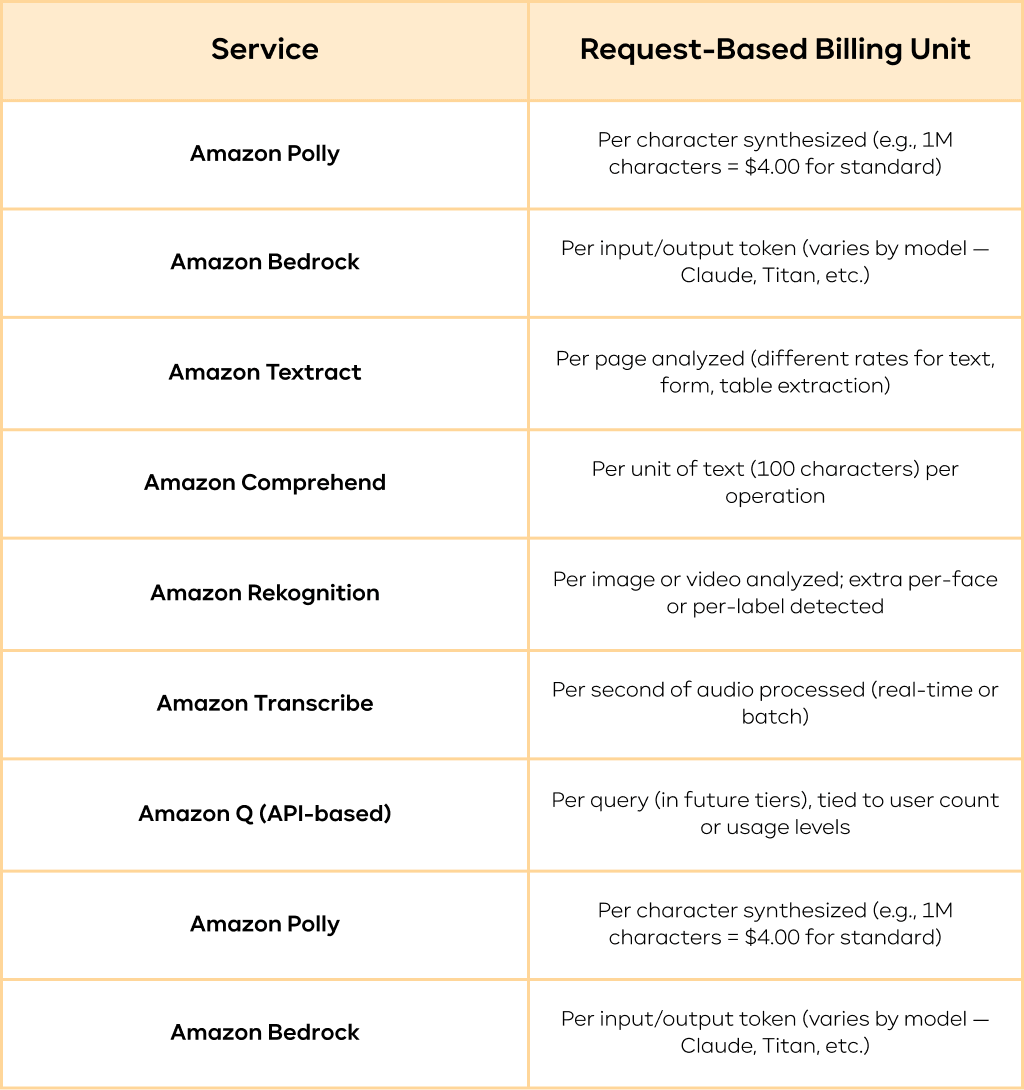
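A quick way to decide between a subscription and pay-as-you-go is to find the break-even volume. This Python sketch assumes a simple flat fee versus a constant per-unit rate (both hypothetical inputs); real plans may add tiers, quotas, or overage charges.

```python
import math


def monthly_costs(units: float, per_unit_rate: float, flat_fee: float) -> dict[str, float]:
    """Cost of the same monthly volume under each plan."""
    return {"pay_as_you_go": units * per_unit_rate, "subscription": flat_fee}


def cheaper_plan(units: float, per_unit_rate: float, flat_fee: float) -> str:
    """Pick the cheaper plan; ties go to pay-as-you-go, which carries no commitment."""
    costs = monthly_costs(units, per_unit_rate, flat_fee)
    return min(costs, key=costs.get)


def break_even_units(flat_fee: float, per_unit_rate: float) -> int:
    """Smallest whole-unit monthly volume at which the flat fee is strictly cheaper."""
    return math.floor(flat_fee / per_unit_rate) + 1


if __name__ == "__main__":
    # Hypothetical: $20/user/month subscription vs $0.50 per request.
    print(break_even_units(20, 0.5))
    print(cheaper_plan(100, 0.5, 20), cheaper_plan(10, 0.5, 20))
```

Here the subscription only wins from 41 requests per user per month upward. If your usage hovers near that line, the flexibility of pay-as-you-go may be worth more than the small savings.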

Request-based pricing

Many AI services charge per request or API call. The pricing is based on input volume, type, or complexity. These can be images, videos, documents, text characters, tokens, data units, etc.

The advantages of this pricing model are:

- Simplicity and transparency: clear link between calls and costs.

- Fine-grained cost control: you pay for each processed item.

- Automatic scaling

- Ideal for stateless workflows

Let’s also look at the disadvantages it has:

- High cost of generative AI at scale if throughput is high

- Input complexity increases the cost of generative AI

- Harder to predict in variable workloads

- Limited discounts or commitments

The best situation to apply request-based pricing is when your usage is irregular or event-driven.

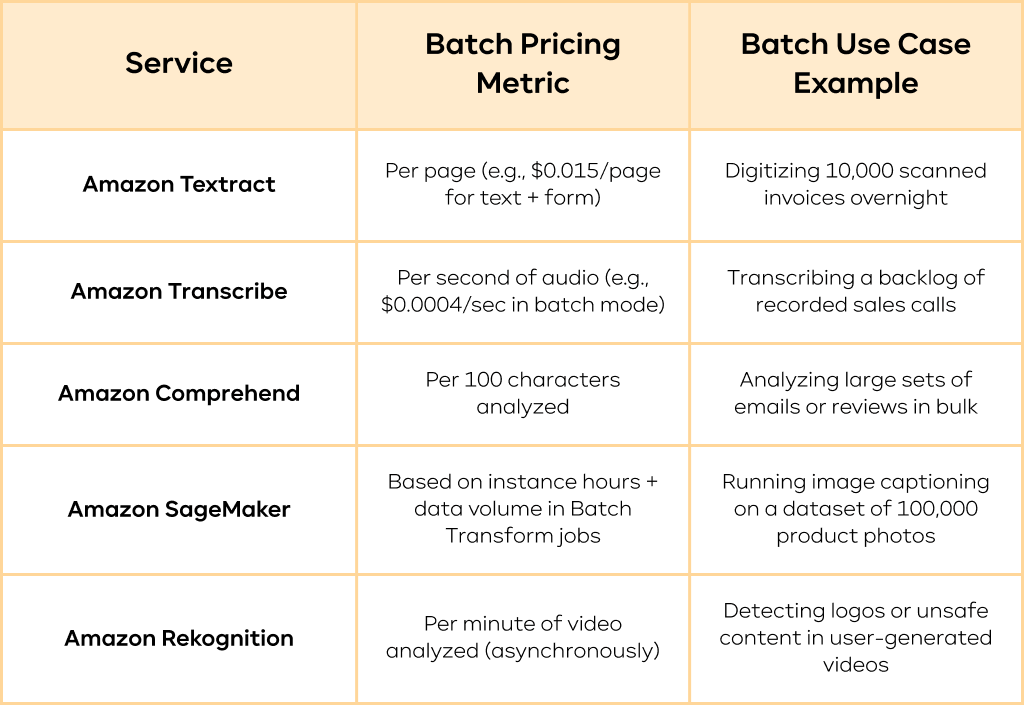
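Several request-based services, such as Amazon Rekognition's image APIs, use graduated volume tiers, where the per-request price drops as monthly volume crosses tier boundaries. The sketch below bills each slice of requests at its own tier's price. The tier boundaries and prices are hypothetical placeholders, not AWS figures.

```python
import math

# Hypothetical graduated tiers: (cumulative request ceiling, price per request in USD).
# Real AWS tiers, boundaries, and prices differ by service and region.
HYPOTHETICAL_TIERS = [
    (1_000_000, 0.0010),
    (5_000_000, 0.0008),
    (math.inf, 0.0006),
]


def tiered_request_cost(requests: int, tiers=HYPOTHETICAL_TIERS) -> float:
    """Bill each slice of monthly requests at the price of the tier it falls into."""
    cost, lower = 0.0, 0
    for upper, price in tiers:
        if requests <= lower:
            break  # no requests left to bill in higher tiers
        cost += (min(requests, upper) - lower) * price
        lower = upper
    return round(cost, 2)


if __name__ == "__main__":
    for volume in (500_000, 2_000_000, 6_000_000):
        print(volume, tiered_request_cost(volume))
```

Note that only the requests above each boundary get the lower price; the first million are always billed at the top rate.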

Batch pricing

Batch pricing applies when you process large volumes of data in asynchronous bulk jobs. You still pay for the data volume, but the price per unit is typically lower.

This model has certain benefits and drawbacks. The advantages are:

- Cost efficiency for large volumes

- Simplified orchestration

- Automatic scalability via parallelization and throughput optimization

- Ideal for non-real-time workloads

The limitations of batch pricing are:

- No real-time results, as processing may take from minutes to hours.

- Limited interactivity – you submit a job and wait.

- AWS resources may have quotas and limits (file size, job duration, etc.)

- Complex handling of outputs

Batch pricing is a perfect choice for large datasets that don’t require immediate responses or results.

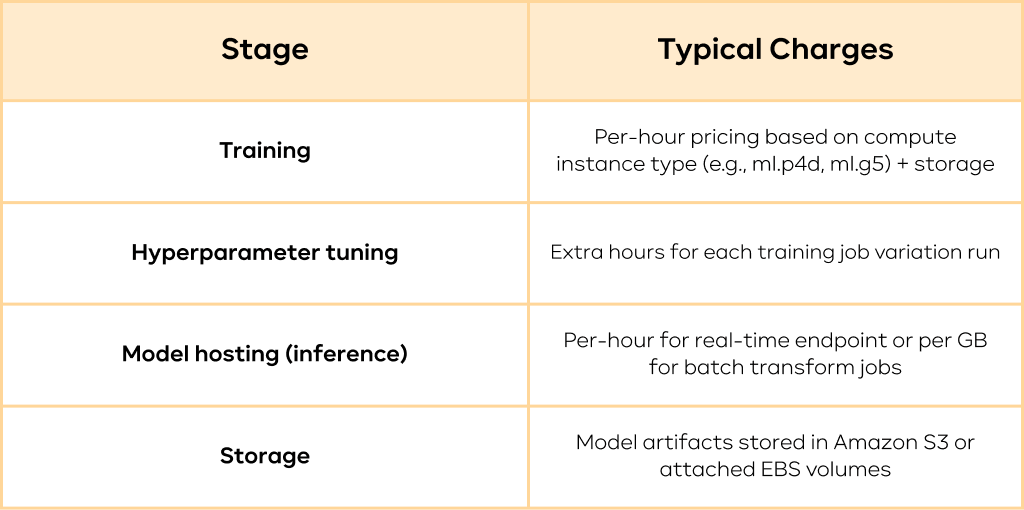
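The batch-versus-real-time trade-off comes down to two questions: how much the batch discount saves and whether you can wait for the job. This Python sketch treats the discount and the expected batch turnaround as inputs you look up or measure per service and model; neither value is an AWS figure.

```python
def batch_vs_on_demand(units: float, on_demand_rate: float, batch_discount: float) -> dict[str, float]:
    """Estimate savings from running a workload as a batch job instead of on demand.

    batch_discount is the fraction taken off the on-demand unit price
    (an assumption you supply per service and model).
    """
    if not 0.0 <= batch_discount < 1.0:
        raise ValueError("batch_discount must be in [0, 1)")
    on_demand = units * on_demand_rate
    batch = on_demand * (1.0 - batch_discount)
    return {"on_demand": round(on_demand, 2), "batch": round(batch, 2), "savings": round(on_demand - batch, 2)}


def choose_mode(deadline_hours: float, expected_batch_hours: float) -> str:
    """Use batch only when results are not needed before the batch job is expected to finish."""
    return "batch" if deadline_hours >= expected_batch_hours else "on_demand"
```

For example, `batch_vs_on_demand(2000, 0.002, 0.5)` returns a $4.00 on-demand cost against $2.00 in batch, and `choose_mode(24, 6)` picks batch because a next-day deadline comfortably covers a six-hour job.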

Model training and deployment costs

AWS charges separately for model training or fine-tuning and for hosting it. Training a model can require GPU time, storage for training data, and network transfer. This can add up, so plan wisely.

These costs apply to SageMaker, Bedrock custom models, Comprehend Custom, and Polly Brand Voice.

For example, training a large language model on an ml.p4d.24xlarge instance may cost $32–$40 per hour, depending on the region.

Customization (fine-tuning) pricing

Fine-tuning large models adds its own cost of generative AI, because it requires specialized compute instances for extended training sessions.

Keep these cost considerations in mind when fine-tuning large language models:

- Extended training: GPU and AWS Trainium instances are expensive, especially for big datasets.

- Managed spot training: spot instances give discounts but can face interruptions.

- Data pipeline efficiency: move or process data only when needed to avoid extra fees.
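The spot-versus-on-demand trade-off in the list above can be estimated with a few multiplications. This sketch assumes you know the expected job length and can guess a spot discount and the fraction of work redone after interruptions; both are assumptions you supply, and checkpointing is what keeps the re-run fraction small.

```python
def training_cost(hours: float, hourly_rate: float, instances: int = 1,
                  spot_discount: float = 0.0, rerun_overhead: float = 0.0) -> float:
    """Estimate the compute cost of a training or fine-tuning job.

    spot_discount: fraction saved versus on-demand pricing (0.0 means on-demand).
    rerun_overhead: extra fraction of hours spent redoing work after spot
    interruptions (for example, 0.15 means 15% more billed hours).
    """
    billed_hours = hours * (1.0 + rerun_overhead)
    return round(billed_hours * hourly_rate * instances * (1.0 - spot_discount), 2)


if __name__ == "__main__":
    # 24-hour job at $35/hour, the midpoint of the $32–$40 range mentioned above.
    print(training_cost(24, 35))
    # Hypothetical spot run: 60% discount, 15% of the work re-run after interruptions.
    print(training_cost(24, 35, spot_discount=0.6, rerun_overhead=0.15))
```

Under these assumptions the on-demand job costs $840.00 and the spot job about $386.40, so spot still wins comfortably even after paying for repeated work.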

Customization is supported in the following services:

| Service | Customization Method | Pricing Model | Example Use Case | Cost Estimate |
|---|---|---|---|---|
| Amazon SageMaker | Full control: custom model training, tuning, and deployment | Pay per compute instance hour (GPU), storage, tuning jobs | Fine-tuning an LLM on ml.g5.12xlarge for 10 hours | ~$350–$500 (compute + storage; inference billed separately) |
| Amazon Bedrock | Fine-tuning supported for select FMs (e.g., Claude, Titan, Cohere) | Per fine-tuning job (token-based) + hosted model pricing | Fine-tuning with 100K tokens | ~$10–$20 per training job (plus token-based inference costs) |
| Amazon Comprehend Custom | Custom entity recognition or classification | Based on training time, data volume, and model hosting | Training a classifier on 10K labeled documents | ~$50–$200 (based on training size; hosting extra) |
| Amazon Polly Brand Voice | Custom neural voice creation via studio training | Requires AWS engagement; pricing is negotiated | Creating a custom neural voice with recordings | Custom quote covering training and deployment setup* |

\* Polly Brand Voice pricing is not publicly listed.
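For token-billed customization such as the Amazon Bedrock row above, the training charge generally scales with tokens processed: the size of the training set multiplied by the number of epochs, plus a monthly fee to store the custom model. The sketch below encodes that assumption; the per-1K-token price and storage fee are placeholders to replace with the current Bedrock pricing for your chosen model.

```python
def fine_tuning_job_cost(training_tokens: int, epochs: int, price_per_1k_tokens: float,
                         monthly_storage_fee: float = 0.0, months_stored: int = 0) -> float:
    """Tokens processed = training tokens x epochs; storage is billed per month kept.

    All prices are caller-supplied placeholders; inference costs are not included.
    """
    tokens_processed = training_tokens * epochs
    training = tokens_processed / 1000 * price_per_1k_tokens
    storage = monthly_storage_fee * months_stored
    return round(training + storage, 2)
```

The key design point is the epoch multiplier: running three epochs instead of one triples the training charge, so it pays to check whether extra epochs actually improve validation results.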

Storage fees

Storing data, logs, or model artifacts incurs monthly charges. Regular reviews help you avoid paying for data you no longer need.

Here are the storage fees for the main AWS generative AI services. It is important to mention that not all services charge for storage separately. Some of them include these costs in other fees or use separate services for storage, like S3.

| Service | Storage-Related Fees | Example Pricing (Estimated) |
|---|---|---|
| Amazon SageMaker | Charges for EBS volumes + S3 model storage | EBS: ~$0.10/GB-month; S3: ~$0.023/GB-month |
| Amazon Bedrock | No direct storage fees for on-demand use (custom models carry a monthly model storage fee); storage may apply when using RAG with S3/OpenSearch | OpenSearch EBS: ~$0.10/GB-month; S3 (RAG docs): ~$0.023/GB-month |
| Amazon Q | No built-in storage fees; indexes data from external sources like S3, Confluence, etc. | S3 for doc sources: ~$0.023/GB-month; embedding store (optional): varies |
| OpenSearch Vector Search | Storage charged for vector indexes (EBS), snapshots (S3), and document data | EBS (data nodes): ~$0.10/GB-month; S3 snapshots: ~$0.023/GB-month |
| Amazon Transcribe | Audio and transcript files stored in S3 incur storage fees | S3: ~$0.023/GB-month (Standard); Intelligent-Tiering possible |
| Amazon Polly | No internal storage; audio files saved to S3 cost separately | S3 (MP3/OGG/etc.): ~$0.023/GB-month |
| Amazon Textract | No storage costs for processing; input/output typically stored in S3 | S3 scanned docs + JSON output: ~$0.023/GB-month |
| Amazon Rekognition | Face collections and custom labels incur storage; video inputs use temporary S3 | Face metadata: ~$0.00001 per face/month; S3: ~$0.023/GB-month |
| Amazon Comprehend | No direct storage; depends on S3 usage and model artifacts if using Comprehend Custom | S3 input/output: ~$0.023/GB-month; custom models: pricing varies |

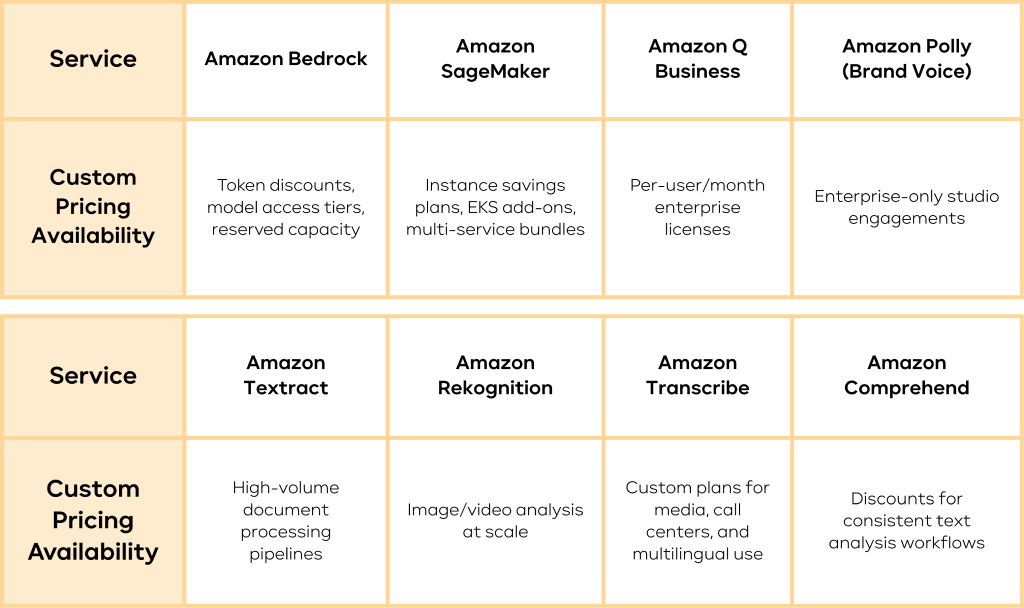
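Storage fees are small per gigabyte but compound when data only ever grows. This Python sketch reuses the approximate S3 and EBS rates from the table above (verify current regional prices) and projects the monthly bill for a dataset that keeps growing with no cleanup; the tier names are labels chosen for this example.

```python
# Per-GB-month estimates taken from the table above (verify current regional prices).
RATES_PER_GB_MONTH = {"s3_standard": 0.023, "ebs": 0.10}


def monthly_storage_cost(gb_by_tier: dict[str, float],
                         rates: dict[str, float] = RATES_PER_GB_MONTH) -> float:
    """Sum GB stored in each tier multiplied by that tier's monthly rate."""
    return round(sum(gb * rates[tier] for tier, gb in gb_by_tier.items()), 2)


def storage_projection(start_gb: float, growth_gb_per_month: float,
                       tier: str, months: int) -> list[float]:
    """Monthly storage bill when data keeps growing and nothing is deleted."""
    return [monthly_storage_cost({tier: start_gb + growth_gb_per_month * m}) for m in range(months)]


if __name__ == "__main__":
    print(monthly_storage_cost({"s3_standard": 500, "ebs": 200}))
    print(storage_projection(100, 50, "s3_standard", 3))
```

The projection grows linearly each month, so the cumulative spend grows quadratically; scheduled deletion or archiving is what flattens that curve.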

Custom pricing

If you need a special setup for huge volumes or strict compliance, you can get a custom deal from AWS.

Take a look at what is eligible for custom pricing from AWS:

How pricing varies based on usage, data storage, and compute time

The total generative AI cost depends on more than the number of requests. Data size, model complexity, and compute time also play a part. For instance, a high-throughput text-generation platform will cost more in CPU/GPU usage than a lightly used image recognition pipeline. Plus, large amounts of stored vector embeddings can increase storage fees.

Take a simple example: a social media platform uses AI to moderate content. It stores user photos, runs them through a classification model, and saves embeddings for future search. The cost of generative AI comes from:

- Storage: Images and embeddings in S3 or an OpenSearch index.

- Compute: Real-time scanning of new posts.

- Maintenance: Retraining the model as user content evolves.

Understanding these pieces helps you predict and reduce unexpected bills.
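The moderation example can be modeled as three cost components. This sketch amortizes retraining over the months between runs so each month carries its fair share. Every price and volume is a caller-supplied assumption, not an AWS quote.

```python
from dataclasses import dataclass


@dataclass
class MonthlyCost:
    storage: float      # images and embeddings kept in S3 or an OpenSearch index
    compute: float      # real-time scanning of new posts
    maintenance: float  # retraining, amortized per month

    @property
    def total(self) -> float:
        return self.storage + self.compute + self.maintenance


def moderation_cost(new_images: int, price_per_image: float, stored_gb: float,
                    price_per_gb_month: float, retrain_cost: float,
                    retrain_every_months: int) -> MonthlyCost:
    """Build a monthly breakdown from hypothetical volumes and prices."""
    return MonthlyCost(
        storage=stored_gb * price_per_gb_month,
        compute=new_images * price_per_image,
        maintenance=retrain_cost / retrain_every_months,
    )


if __name__ == "__main__":
    c = moderation_cost(1_000_000, 0.001, 2_000, 0.023, 600, 3)
    print(f"storage ${c.storage:.2f}, compute ${c.compute:.2f}, "
          f"maintenance ${c.maintenance:.2f}, total ${c.total:.2f}")
```

With these made-up inputs, compute dominates ($1,000.00 of a $1,246.00 total), which tells you to look at scan volume and per-image pricing before worrying about storage.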

Factors affecting AWS Generative AI pricing

Several factors drive your monthly bill. Knowing them lets you adjust usage to fit your budget.

Number of queries

Each query costs money. Whether you run a chatbot or a content filter, every API call accumulates fees.

If some features get little use, consider disabling them or limiting them to high-demand windows.

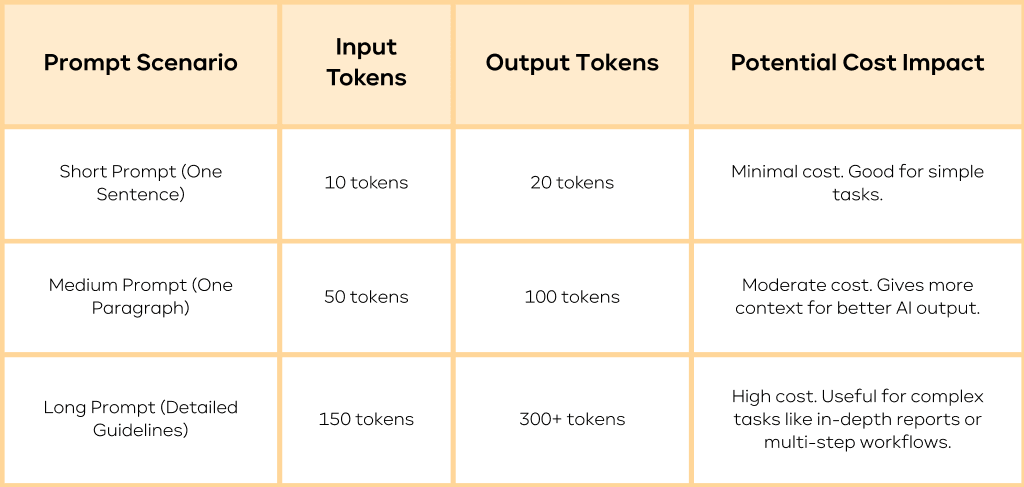

Tokens used for input processing

Text models meter usage in tokens, so the length of your prompt affects the cost of generative AI: a longer prompt consumes more input tokens.

“Write a product blurb for shoes” is cheaper than a giant block of brand guidelines plus style notes.

Tokens generated in the output

Output tokens also matter. If your application needs detailed content, you will incur a higher cost of generative AI.

Cap the maximum output length to keep expenses under control; summaries, for example, can stay concise.

Look at the example of token costs based on prompt/response length:

The size of your prompt and the response both affect the cost of generative AI. When your application does not need excessive detail, keeping prompts shorter can help you save.
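Because input and output tokens are usually priced separately, you can bound the cost of a single call before sending it. This sketch uses a rough four-characters-per-token heuristic for English (an assumption; each model's tokenizer differs) and hypothetical per-1K-token prices. Output is billed on the tokens actually generated, so the max output setting caps that part of the bill.

```python
import math


def approx_tokens(text: str) -> int:
    """Rough heuristic: about 4 characters per token for English text.
    Real tokenizers differ by model, so treat this as an estimate only."""
    return math.ceil(len(text) / 4)


def max_call_cost(prompt: str, max_output_tokens: int,
                  input_price_per_1k: float, output_price_per_1k: float) -> float:
    """Upper bound on one call's cost; the max output setting caps the output charge."""
    input_cost = approx_tokens(prompt) / 1000 * input_price_per_1k
    output_cost = max_output_tokens / 1000 * output_price_per_1k
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical prices: $0.003 per 1K input tokens, $0.015 per 1K output tokens.
    short = "Write a product blurb for shoes"
    long = short + ". " + "Follow these brand guidelines and style notes. " * 200
    for prompt in (short, long):
        print(approx_tokens(prompt), round(max_call_cost(prompt, 200, 0.003, 0.015), 5))
```

Multiply the per-call bound by expected monthly calls to see how much a verbose system prompt, repeated on every request, adds to the bill.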

Costs associated with vector embeddings

Some services convert text or images into vector embeddings for semantic tasks. Storing and indexing them can be costly at scale.

Practical advice:

- Dimensionality: use smaller embedding sizes if possible.

- Selective storage: only embed data that you query often.
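Dimensionality has a direct, linear effect on raw vector size. Some embedding models, for example Amazon Titan Text Embeddings V2, let you choose a smaller output dimension. The sketch below assumes float32 values (4 bytes each) and counts only the raw vectors; index structures such as HNSW graphs, replicas, and stored document fields add overhead on top.

```python
def embedding_storage_gib(num_vectors: int, dimensions: int, bytes_per_value: int = 4) -> float:
    """Raw size of the vectors alone, in GiB (float32 by default)."""
    return num_vectors * dimensions * bytes_per_value / 1024**3


if __name__ == "__main__":
    for dims in (1024, 512, 256):
        print(dims, round(embedding_storage_gib(10_000_000, dims), 2))
```

For 10 million vectors, that is about 38 GiB at 1,024 dimensions versus about 9.5 GiB at 256, a 4x reduction before any index overhead. Check retrieval quality on your own data before committing to the smaller size.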

Expenses for maintaining a vector database

You pay for hosting, indexing, and querying in a vector store like OpenSearch.

As data grows, so does the cost of generative AI. Think about archiving old embeddings or using cheaper tiers for less critical data.
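One way to act on this advice is to sort embeddings by when they were last retrieved. The sketch assumes your application logs a last-query timestamp per document (hypothetical bookkeeping); IDs that land in the cold list become candidates for cheaper storage, such as S3 or OpenSearch UltraWarm or cold storage.

```python
from datetime import datetime, timedelta


def split_hot_cold(last_queried: dict[str, datetime], now: datetime,
                   hot_days: int = 30) -> tuple[list[str], list[str]]:
    """Partition document IDs by whether they were retrieved within the last hot_days."""
    cutoff = now - timedelta(days=hot_days)
    hot = sorted(doc for doc, ts in last_queried.items() if ts >= cutoff)
    cold = sorted(doc for doc, ts in last_queried.items() if ts < cutoff)
    return hot, cold


if __name__ == "__main__":
    log = {
        "faq-billing": datetime(2025, 6, 29),
        "promo-2024": datetime(2025, 3, 1),
        "returns-policy": datetime(2025, 5, 31),
    }
    print(split_hot_cold(log, now=datetime(2025, 6, 30)))
```

The 30-day window is an arbitrary default; tune it to your query logs so you do not archive items that are still retrieved regularly.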

Security and compliance with Amazon Bedrock Guardrails

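Compliance features and extra security controls add cost. Amazon Bedrock Guardrails, for instance, is billed on the volume of text it evaluates, so applying many policies to every request raises your bill. If you handle sensitive information, you may still need advanced guardrails.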

Choose only the necessary compliance features. This keeps you compliant without blowing the budget.

Techniques for efficient data chunking

Breaking large documents into smaller parts can lower token usage. This approach focuses on relevant text instead of processing everything at once.

Implementation examples:

- Chunk size: split text into 1,000–2,000 tokens.

- Metadata: tag each piece with context. Retrieve only the needed segments for your queries.
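The two steps above (splitting and tagging) can be sketched as follows. Whitespace-separated words stand in for tokens, which is an approximation, since model tokenizers usually produce more tokens than words. The `section` tag and the filter helper are hypothetical illustrations of metadata-based retrieval, not a specific AWS API.

```python
def chunk_document(text: str, doc_id: str, section: str,
                   max_tokens: int = 1000, overlap: int = 100) -> list[dict]:
    """Split text into overlapping chunks, each tagged with metadata."""
    if not 0 <= overlap < max_tokens:
        raise ValueError("overlap must be in [0, max_tokens)")
    words = text.split()  # words approximate tokens here
    step = max_tokens - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append({
            "id": f"{doc_id}-{len(chunks)}",
            "text": " ".join(words[start:start + max_tokens]),
            "metadata": {"doc_id": doc_id, "section": section, "start_word": start},
        })
        if start + max_tokens >= len(words):
            break  # this chunk already reaches the end of the document
    return chunks


def select_chunks(chunks: list[dict], **filters: str) -> list[dict]:
    """Keep only chunks whose metadata matches every filter, e.g. section="pricing"."""
    return [c for c in chunks if all(c["metadata"].get(k) == v for k, v in filters.items())]
```

The overlap keeps sentences that straddle a boundary retrievable from either side, at the price of storing and embedding about 10% more text with these defaults.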

How to optimize AWS Generative AI costs

McKinsey research indicates that adopting AI can boost efficiency by up to 30%. Yet it warns that poor cost governance can sabotage returns.

Balancing high performance with budget control is possible. Look at your usage patterns and refine how you store, query, and train. Design an architecture that fits actual needs rather than maximum possible loads.

Look at the ways to optimize the cost of generative AI:

- Right-sizing instances: do not over-provision compute if your usage is moderate.

- Automate cleanups: delete or archive unused data to lower storage fees.

- Leverage spot instances: for tasks that are not time-sensitive, spot instances can cut compute expenses.

- Watch for new AWS updates: price reductions or new instance types can appear at any time.

- Try a few pricing plans: test them to see which offers better value.

At the same time, you should balance optimization with performance. Here are a few best practices:

- Caching inferences. If queries repeat often, cache your responses. This reduces repeated calls for the same content.

- Load testing. Before you go live, see how the system behaves. Identify speed bottlenecks and cost hot spots.

- Iterative development. Start small with a minimal version. Grow in stages, adding resources only when justified by results.
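The caching practice above can be sketched as an exact-match cache in front of your model call. `call_model` is a hypothetical function you supply (for example, a wrapper around your endpoint). This in-memory version suits a single process; a shared deployment would need an external store with expiry. Only identical prompts hit the cache, so paraphrased questions still reach the model.

```python
import hashlib
from typing import Callable


class InferenceCache:
    """In-memory response cache keyed on model ID plus prompt."""

    def __init__(self, call_model: Callable[[str, str], str]) -> None:
        self._call_model = call_model  # hypothetical function that invokes the model
        self._store: dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def generate(self, model_id: str, prompt: str) -> str:
        key = hashlib.sha256(f"{model_id}\n{prompt}".encode("utf-8")).hexdigest()
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        response = self._call_model(model_id, prompt)  # only cache misses are billed
        self._store[key] = response
        return response
```

Tracking `hits` and `misses` tells you whether the cache earns its keep: the hit rate is roughly the fraction of model calls, and their cost, you avoided.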

Tips for managing costs effectively

Below are tried-and-true ideas for staying on track with the cost of generative AI. These steps help keep your AI ambitions aligned with your budget.

Using AWS Cost Explorer and AWS Budgets for monitoring and controlling expenses

Gartner found that “unplanned cloud costs” rank high among AI adopters. Tools like AWS Budgets or AWS Cost Explorer lessen these surprises.

AWS Cost Explorer: Gives you charts and trends of your spending. Identifies cost drivers at a glance.

AWS Budgets: Lets you set custom thresholds and sends alerts if you approach them.

Key features:

- Forecasting: predict next month’s cost of generative AI.

- Resource tagging: group costs by projects or teams with tags.

- Actionable insights: spot unusual spending quickly to fix any issues.
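AWS Budgets can alert on actual or forecasted spend. To illustrate the idea of a forecast-based alert, the sketch below uses a naive linear run rate (average daily spend so far times days in the month). That is an assumption for illustration, not the forecasting method AWS uses.

```python
import calendar
from datetime import date


def run_rate_forecast(spend_to_date: float, today: date) -> float:
    """Naive linear projection of month-end spend from the average daily spend so far."""
    days_in_month = calendar.monthrange(today.year, today.month)[1]
    return spend_to_date / today.day * days_in_month


def triggered_thresholds(spend_to_date: float, today: date, budget: float,
                         thresholds: tuple[float, ...] = (0.8, 1.0)) -> list[float]:
    """Return the budget fractions that the month-end forecast has reached."""
    forecast = run_rate_forecast(spend_to_date, today)
    return [t for t in thresholds if forecast >= budget * t]


if __name__ == "__main__":
    # $500 spent by June 10 projects to $1,500 for June; an 80% alert fires on a $1,600 budget.
    print(run_rate_forecast(500, date(2025, 6, 10)))
    print(triggered_thresholds(500, date(2025, 6, 10), budget=1600))
```

Forecast-based alerts give you warning early in the month, while there is still time to throttle a runaway workload.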

Recommendations for choosing the right pricing plan and optimizing resource usage

Compare models like pay-as-you-go and subscriptions to decide which fits your needs. Consider:

- Consistency of usage: steady volumes can benefit from fixed-price options.

- Growth plans: if you will scale up, confirm that your model supports bigger loads without hidden costs.

- Auto scaling: set triggers to shrink or expand resources based on real-time demand.
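Auto scaling triggers usually follow a target-tracking idea: keep a per-instance metric near a target value. SageMaker endpoints, for example, can scale on invocations per instance. The sketch below shows only that core calculation under simplifying assumptions; real policies also apply cooldowns and alarm evaluation periods.

```python
import math


def desired_capacity(current_instances: int, metric_per_instance: float, target_per_instance: float,
                     min_instances: int = 1, max_instances: int = 10) -> int:
    """Simplified target tracking: size the fleet so each instance sits near the target."""
    if target_per_instance <= 0:
        raise ValueError("target_per_instance must be positive")
    total_load = current_instances * metric_per_instance
    desired = math.ceil(total_load / target_per_instance)
    return max(min_instances, min(max_instances, desired))
```

For example, two instances each handling 150 requests per minute against a target of 100 scale out to three, while four instances at 20 per minute scale in to one. The `max_instances` cap is your cost guardrail: it bounds spend even if traffic spikes.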

To optimize resource allocation, do the following:

- Capacity reservations: reserve capacity for stable workloads to potentially get lower rates.

- Testing and validation: start with small workloads. Track usage patterns, then forecast for full production.

Conclusion

Today’s AI-driven world calls for flexible AWS generative AI pricing. You do not need a single plan for every situation: you can choose pay-as-you-go, custom pricing, or other structures to match your needs. By managing requests, chunking data, picking the right instances, and watching your usage, you can control the cost of generative AI on AWS while staying free to scale as your project grows.


FAQ

How expensive is it to maintain a vector database?

Storing large volumes of embeddings can raise storage costs. You also need indexing and compute resources for queries. Keep an eye on how often you search those vectors. Storing rarely used embeddings can drain your budget. A tiered strategy can help: use fast storage for high-demand data and cheaper storage for older items.

By following these practices, you will handle the cost of generative AI more effectively. AWS provides the tools and resources to build advanced AI. You can fine-tune solutions, secure your data, and only pay for what you use. With constant reviews and smart architecture, you can harness modern AI capabilities while avoiding surprise bills. Stay alert to AWS updates, refine your resource usage, and adopt best practices like chunking or caching. This approach will keep you on track for scalable AI success – without the inflated cost of generative AI.

What are the key services offered by AWS for Generative AI?

AWS covers many tasks, from model training (SageMaker) to advanced text processing (Comprehend) or vector searching (OpenSearch). Amazon Bedrock handles foundation model management, while Amazon Rekognition deals with image and video classification. These services let businesses adopt generative AI in many ways.

What techniques can be used for efficient data chunking to reduce costs?

Data chunking involves breaking files into smaller segments, then tagging them with context. You only process the relevant chunk for a query. You can code chunking in AWS Lambda or a similar service. By focusing on necessary text, you reduce token usage and speed up tasks.