50% faster document analysis and decision making due to the integration of a secure and self-hosted AI solution

Client’s result

Industry | Retail Apparel & Fashion

Company size | 51-200 employees

Service | AWS consulting, AWS generative AI services

Location | USA

About the client

Main challenges

Challenge 1

- J.Hilburn holds many confidential financial and other business documents. Analyzing them took a lot of time because the process was manual and unoptimized.

- The client realized that an AI-based solution could help and had an idea of what it would look like, but their team needed professional help to build and deploy it. The main goal was to enable accurate analysis and efficient decision making through process automation and optimization.

Challenge 2

- The client required a private, self-hosted AI-based solution. They didn’t want a proprietary solution such as OpenAI’s due to security concerns.

- Proprietary models can be trained on data that users share and upload. How much user data is used depends on the pricing plan: the higher the plan, the less user information goes into training. However, the client didn’t want to share any information at all.

What we did

Solution 1

A self-hosted AI solution with accurate and efficient document interaction

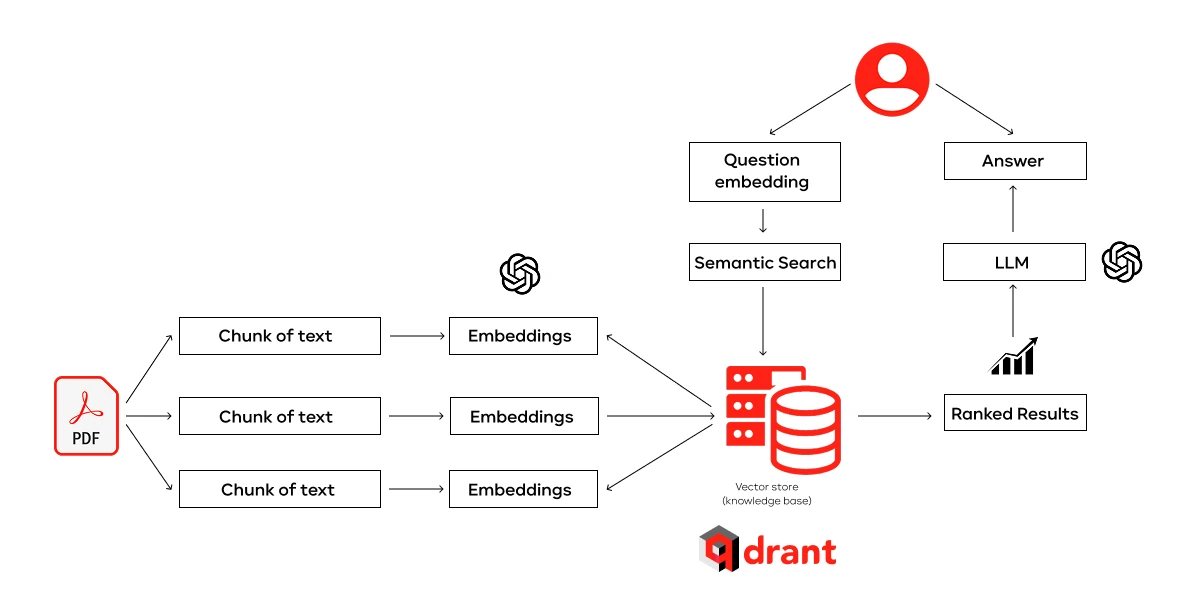

- Provide efficient document similarity search

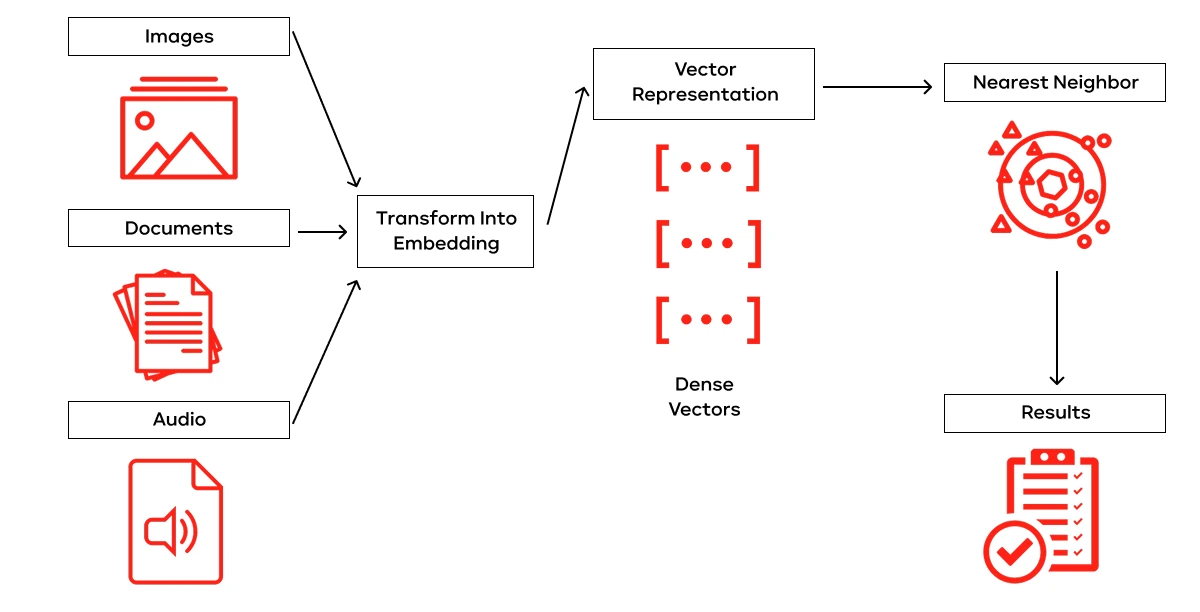

Interacting with large volumes of unstructured data requires a system built for that purpose. To support effective document interaction, the system must be capable of rapid and accurate similarity searches.

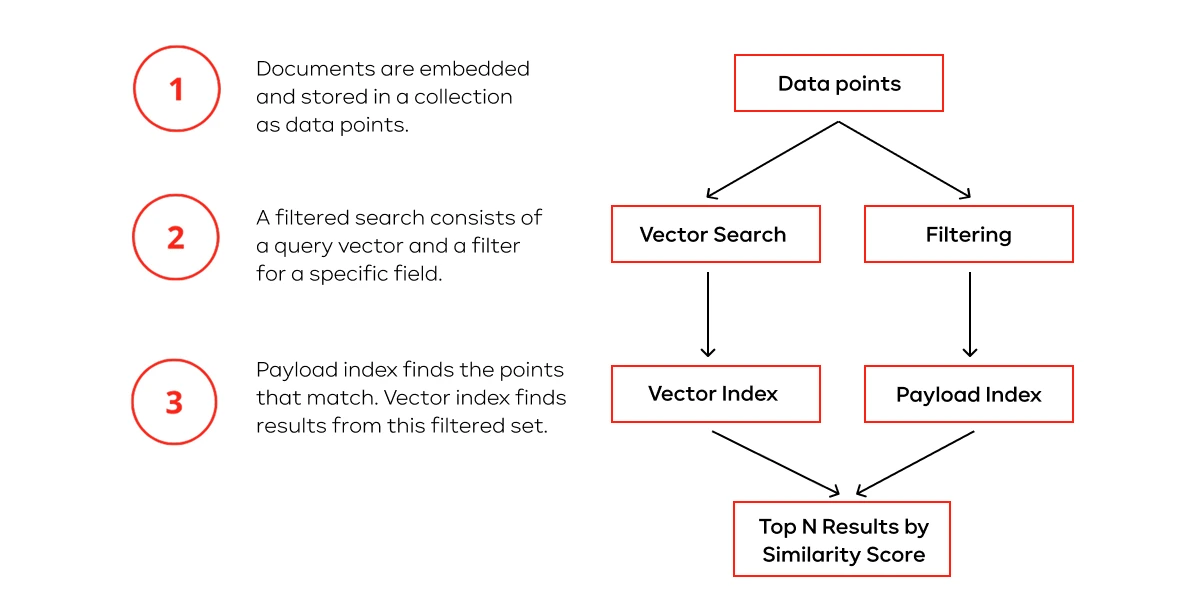

To address this, we implemented Qdrant, an open-source vector database. It is designed for high-performance vector similarity search.

Qdrant performs vector similarity search well thanks to the following features:

- Vector indexing techniques. Qdrant organizes vectors in a graph structure (an HNSW index) for fast approximate nearest neighbor search.

- Real-time updates to the vector indices. This allows insertions, deletions, and updates without major downtime.

- Optimized storage. It manages large volumes of vector data efficiently. This ensures quick read and write operations.

- Hybrid search that combines vector similarity with traditional filtering capabilities.

- Parallel processing and optimizations for modern CPU architectures that improve search performance.

- API accessibility to integrate vector search functionalities into applications with minimal overhead.

- Deployment in distributed environments. This lets the system scale as the demand for processing and storage grows.

Qdrant’s advanced compression techniques and distributed, cloud-native design allow for efficient handling of high-dimensional vectors, enabling quick access to relevant information within documents.
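To make the idea of hybrid search more concrete, here is a minimal sketch in plain Python. It is not Qdrant's API and not the client's implementation: it uses a brute-force cosine comparison instead of a graph index, and the document IDs, vectors, and the `department` payload field are all invented for illustration. It only shows the principle of filtering by metadata first and ranking by vector similarity second.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def filtered_search(points, query_vector, must_match, limit=3):
    """Keep points whose payload matches every filter, then rank by similarity."""
    candidates = [
        p for p in points
        if all(p["payload"].get(key) == value for key, value in must_match.items())
    ]
    scored = [(cosine_similarity(p["vector"], query_vector), p["id"]) for p in candidates]
    scored.sort(reverse=True)  # highest similarity first
    return [(point_id, score) for score, point_id in scored[:limit]]


# Hypothetical data: tiny 3-dimensional vectors stand in for real embeddings.
points = [
    {"id": "q1-report", "vector": [0.9, 0.1, 0.0], "payload": {"department": "finance"}},
    {"id": "q2-report", "vector": [0.8, 0.2, 0.1], "payload": {"department": "finance"}},
    {"id": "style-guide", "vector": [0.85, 0.15, 0.0], "payload": {"department": "design"}},
    {"id": "payroll", "vector": [0.0, 0.2, 0.9], "payload": {"department": "finance"}},
]

results = filtered_search(points, [1.0, 0.0, 0.0], {"department": "finance"}, limit=2)
for point_id, score in results:
    print(f"{point_id}: {score:.3f}")
```

The design document scores high on similarity but is excluded by the filter, which is the behavior the hybrid search feature describes. A vector database replaces the brute-force loop with an index so that the same query stays fast on large collections.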

- Implement accurate and private text embeddings

Our experts deployed a self-hosted embedding model from Hugging Face. Hugging Face provides a variety of pre-trained open-source models. They can be fine-tuned to capture the semantic nuances specific to a domain.

Other advantages of using models from Hugging Face are:

- Many models are based on the latest research and deliver exceptional performance.

- There are APIs on the platform that simplify model fine-tuning and deployment.

- A strong community provides support through forums and documentation.

- Hugging Face models are compatible with other machine learning frameworks.

- There are tools and services that help to deploy models to production.

- There are regular updates and model releases on the platform.

It was important to choose the right model, because this choice influences how accurately information is found in the database. Our choice proved to be the right one. It improved the accuracy of tasks like semantic search and document clustering. It also kept the data private within the organization’s infrastructure.
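The case study does not say how candidate models were compared. One common way to check how a model choice affects search accuracy is recall@k on a small set of labeled queries. The sketch below assumes that approach; the function names, document IDs, and result lists are hypothetical.

```python
def recall_at_k(retrieved_ids, relevant_ids, k):
    """Fraction of the relevant documents that appear in the top-k results."""
    if not relevant_ids:
        raise ValueError("relevant_ids must not be empty")
    top_k = set(retrieved_ids[:k])
    return len(top_k & set(relevant_ids)) / len(relevant_ids)


def mean_recall_at_k(runs, k):
    """Average recall@k over (retrieved_ids, relevant_ids) pairs, one per query."""
    return sum(recall_at_k(retrieved, relevant, k) for retrieved, relevant in runs) / len(runs)


# Hypothetical search results for the same two queries under two candidate models.
model_a_runs = [
    (["d1", "d4", "d2"], ["d1", "d2"]),
    (["d7", "d3", "d9"], ["d3"]),
]
model_b_runs = [
    (["d4", "d5", "d1"], ["d1", "d2"]),
    (["d9", "d7", "d8"], ["d3"]),
]

score_a = mean_recall_at_k(model_a_runs, k=3)
score_b = mean_recall_at_k(model_b_runs, k=3)
print(f"model A: {score_a:.2f}, model B: {score_b:.2f}")
```

In this invented example, model A retrieves every relevant document within the top three results and model B retrieves only some of them, so model A would be the better fit for this query set.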

Solution 2

Well-configured data privacy and security

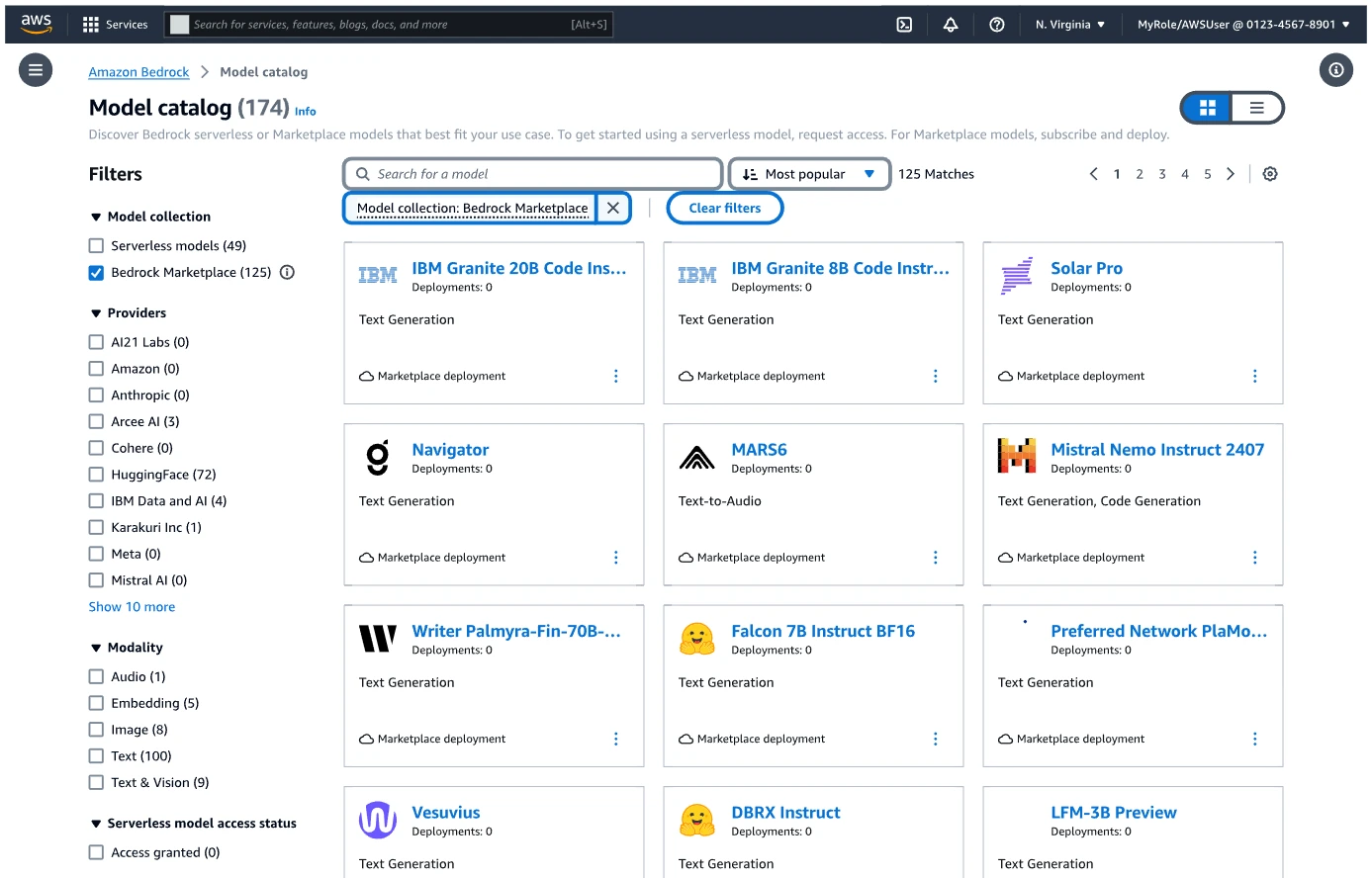

IT-Magic’s team used Amazon Bedrock's foundation models. This service prioritizes data privacy and security. Amazon Bedrock never uses customer data for service improvements. It also doesn’t share it with third-party model providers.

The other reasons we chose Bedrock are:

- The service has ready-made models that are suitable for the project.

- It provides immediate access to models that don’t need extensive setup or training.

- Reduced complexity of model training and fine-tuning.

- The use of foundation models on a pay-as-you-go basis.

- Optimization for rapid development and deployment of generative AI applications.

- Managed service convenience for generative AI.

- Bedrock is easier to use than Amazon SageMaker.

Beyond data privacy, Bedrock supports security in several other ways:

- The use of strong data encryption measures, both at rest and in transit.

- Integration with AWS Identity and Access Management.

- Audit trails and logging for monitoring access and changes to Bedrock resources.

- Regular security upgrades in the service.

- Alignment with AWS security best practices.
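As an illustration of the IAM integration, the sketch below builds a least-privilege policy document that allows invoking a single foundation model. It is an assumption about how access could be scoped, not the policy used in this project. The `build_invoke_policy` helper, the `Sid` value, the region, and `example-model-id` are placeholders.

```python
import json


def build_invoke_policy(region, model_id):
    """Return an IAM policy document that allows invoking one foundation model."""
    resource = f"arn:aws:bedrock:{region}::foundation-model/{model_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowInvokeSingleModel",
                "Effect": "Allow",
                "Action": ["bedrock:InvokeModel"],
                "Resource": resource,
            }
        ],
    }


# Placeholder values: replace with the real region and model identifier.
policy = build_invoke_policy("us-east-1", "example-model-id")
policy_json = json.dumps(policy, indent=2)
print(policy_json)
```

Scoping the `Resource` to one model instead of `*` means that the application role cannot call models nobody has approved for it.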

Key Results and Business Value:

1. Decision-making processes accelerated by 50% through secure, AI-driven document analysis.

2. Enhanced data security and compliance through a self-hosted infrastructure.

3. Up to 50% greater cost efficiency with self-hosted embeddings. This holds when solution usage is high (at or above 50% capacity).
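Why the cost benefit depends on usage can be shown with simple arithmetic. The sketch below assumes a fixed monthly cost for the self-hosted model and a per-request price for a pay-per-use alternative. The case study does not state the comparison baseline or any prices, so every number here is invented, and the values were picked so that break-even falls at 50% utilization purely for illustration.

```python
def self_hosted_savings(fixed_monthly_cost, capacity_requests, utilization, price_per_request):
    """Relative savings of a fixed-cost deployment versus pay-per-use pricing.

    Returns a fraction: 0.5 means 50% cheaper, 0.0 means break-even,
    and a negative value means self-hosting costs more.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in the range (0, 1]")
    requests_served = capacity_requests * utilization
    pay_per_use_cost = requests_served * price_per_request
    return 1 - fixed_monthly_cost / pay_per_use_cost


# Hypothetical figures, not taken from the project.
FIXED_COST = 1000.0      # monthly cost of the self-hosted embedding service
CAPACITY = 1_000_000     # requests per month the service can handle
API_PRICE = 0.002        # price per request of a pay-per-use alternative

for utilization in (0.25, 0.5, 0.75, 1.0):
    savings = self_hosted_savings(FIXED_COST, CAPACITY, utilization, API_PRICE)
    print(f"utilization {utilization:.0%}: savings {savings:+.0%}")
```

With these assumed numbers the fixed cost is spread over more requests as utilization grows, so savings rise from a loss at low usage to 50% at full capacity.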

Features Delivered:

1. AI-powered document processing and interaction capabilities.

2. Secure, self-hosted environment for handling sensitive data.

3. Robust similarity search functionality for efficient document comparison.

Technologies we used

A ready-made open-source solution for document analysis.

Amazon Bedrock

Foundation models for AI processing.

Qdrant

Self-hosted vector database for similarity search.

Amazon EKS

Managed Kubernetes service for application deployment.

Amazon RDS for PostgreSQL

Database for storing conversations.

VPN Access

Secured network access to the private infrastructure.

Client’s feedback

Yatit Thakker

Sr. Product Manager - AI & ML Innovation

A few words from IT-Magic

We enjoyed working with J.Hilburn. Our team appreciated Yatit’s clear communication and task setting. This contributed a lot to delivering the right results and to the positive impression we have of each other. We hope to work together again in the future.


Let’s make your infrastructure efficient, scalable, and secure!

Contact IT-Magic for a consultation and we will find the best solution according to your technical and business needs.