We can all agree that making machine learning run smoothly in your organization can feel tricky. Yet, with the right steps, you can simplify each stage of your project and avoid common pitfalls. One of the best ways to do this is by following a clear path. That’s where Amazon SageMaker best practices come in. They help you build, train, and deploy your ML models faster and more precisely.

In this guide, you’ll discover effective ways to enhance your workflows. You’ll learn how to simplify data preparation, optimize training, ensure security, and make informed decisions for scaling. By the end, you’ll have a set of concrete steps to raise the performance of your machine-learning models. Let’s get started.

What Is SageMaker?

Amazon SageMaker is a fully managed machine learning service from Amazon Web Services. It simplifies building, training, fine-tuning, and deploying machine learning models at scale. Instead of spinning up your own servers and juggling complex infrastructure, you get a single platform that handles most of the heavy lifting.

SageMaker works well with other AWS offerings. It integrates with Amazon S3 for data storage and Amazon CloudWatch for logs and metrics. It also allows you to connect directly to data sources such as Amazon Redshift and Amazon DynamoDB.

You don’t need to be a deep expert to see the value here. SageMaker aims to cut down on the complexity of ML projects. It offers Jupyter Notebook instances, pre-built algorithms, and streamlined workflows. This means your team can focus on what matters, solving business problems and delivering accurate predictions, rather than fighting with infrastructure. With the right approach, SageMaker can make ML a natural part of your day-to-day operations.

Importance of following best practices in ML model development

In machine learning, best practices keep your projects on track. They help ensure your models perform well, deliver reliable inference, and scale without blowing up costs. Think about it: A well-trained model might still fail if you don’t handle data quality issues. A secure system might still run into trouble if you don’t follow standard steps to guard your endpoints. To produce meaningful results, you need to follow a blueprint that guides each stage.

For example, consider a healthcare company using ML to predict patient readmissions. If they follow SageMaker best practices, they’ll clean and preprocess data well. They’ll tune hyperparameters smartly and test their models before deployment. By doing this, they reduce errors and produce accurate predictions. This leads to better patient care and improved trust in the AI-driven system.

In another case, a logistics firm might leverage Amazon SageMaker best practices to optimize routes for delivery trucks. By using best practices for data handling, training, and deployment, they can cut shipping costs and improve on-time performance.

This is not just theory. Real companies have cut model training costs, shortened project timelines, and built more stable ML pipelines by applying these proven techniques.

For example, Kroger, a grocery retailer, uses SageMaker to forecast demand and manage inventory, which has helped it cut costs and streamline operations. Another case is Johnson & Johnson. The pharmaceutical company applied AWS SageMaker best practices to improve its clinical trial processes, reducing the time and cost of data analysis.

Core principles of using Amazon SageMaker

To get the most from SageMaker, it’s wise to follow core principles that guide your entire ML journey.

- Simplicity over complexity

Avoid overengineering. Use built-in tools and automation to streamline workflows. Don’t build everything from scratch if SageMaker offers a ready-made solution.

- Scalability and flexibility

Choose the right instance types and leverage features that let you scale up or down. SageMaker can adjust resources based on load. This makes it easier to handle both small and large projects.

- Continuous monitoring and iteration

Models need upkeep. Monitor them in production using tools like CloudWatch and SageMaker Model Monitor. Adjust as needed to maintain top performance.

- Security and compliance

Follow AWS SageMaker security best practices to keep data safe. Use IAM roles, encryption, and compliance checks.

Focusing on these principles keeps your environment reliable and your costs manageable.

_______________________________________________________________________

Learn how other platforms stack up. Discover new insights to shape your strategy.

Check out this guide on the Best AI Cloud Platforms.

_______________________________________________________________________

Benefits of using SageMaker for model development and deployment

SageMaker takes on much of the heavy lifting. It manages infrastructure, offers pre-built algorithms, and supports automatic scaling. For example:

- Reduced infrastructure management. Let AWS handle servers and updates. Focus on your model logic, not hardware.

- Cost efficiency. Pay only for what you use. Shut down idle notebook instances. Scale inference endpoints on demand.

- Auto-scaling. Adjust resources to match the workload. This means you spend less when demand dips and maintain performance when it spikes.

- Pre-built algorithms. Get started faster with a catalog of built-in models for classification, regression, or image recognition.

These features mean you can spend more time improving model quality and less time on grunt work.

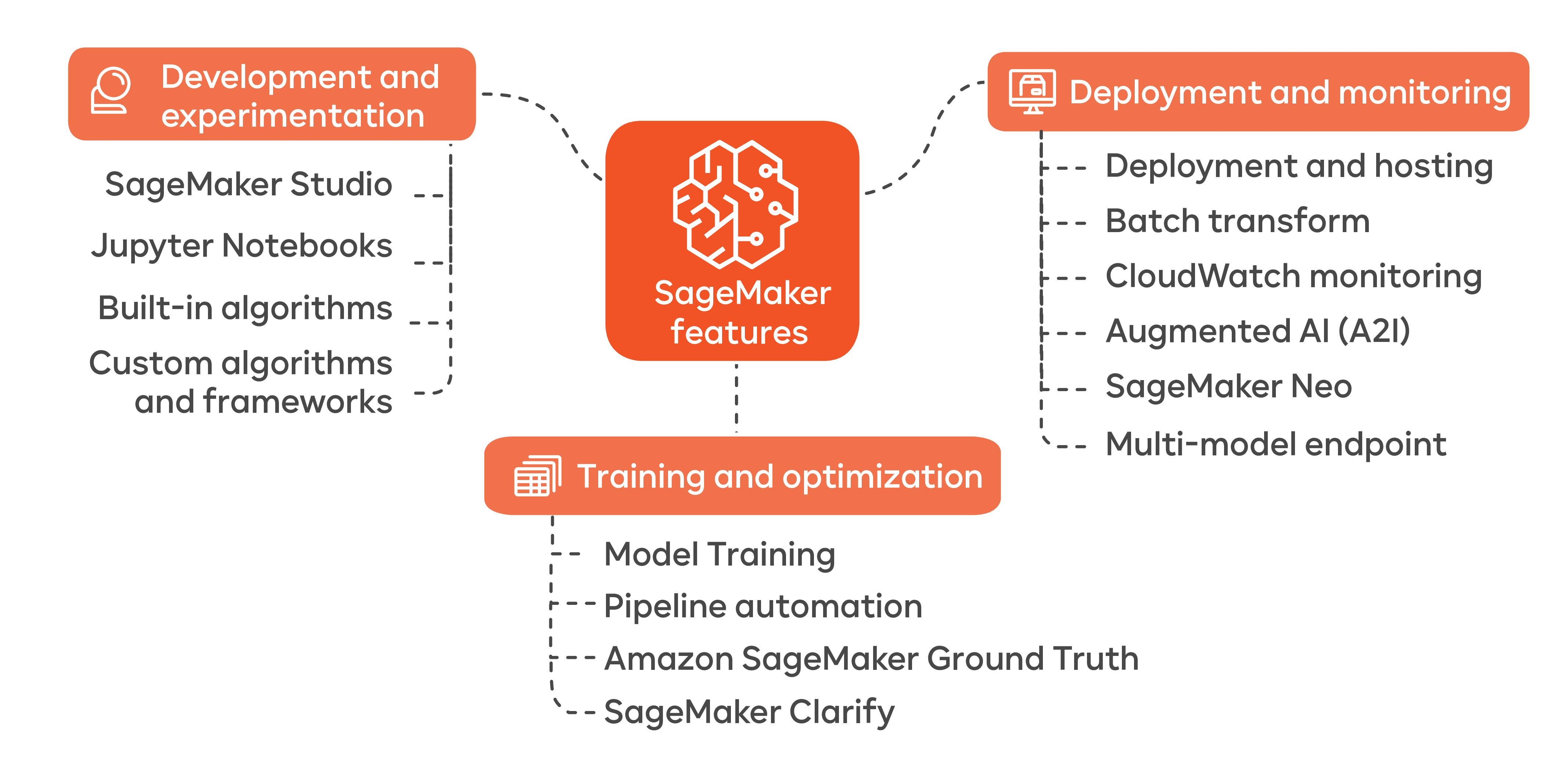

Overview of key features and capabilities of the platform

SageMaker offers many features to speed up your ML workflow. Let’s look at the key ones in more detail.

- SageMaker Studio: A fully integrated development environment. It offers a single pane of glass to build, train, and deploy. Following SageMaker Studio best practices means you can track experiments, debug code, and collaborate with your team more effectively.

- Autopilot: Automates data preprocessing, model selection, and tuning. This saves time and gives you a strong baseline to build on.

- Built-in algorithms: Use ready-made solutions for image recognition, text analysis, or forecasting. There is no need to reinvent the wheel.

- Data labeling: SageMaker Ground Truth simplifies labeling tasks. This makes large-scale labeling projects more efficient.

All these capabilities help you produce better models faster. They also reduce guesswork and lead to more consistent outcomes.

Best practices for data preparation

Data is the fuel for your model. Poor data leads to weak predictions. Great data sets the stage for robust performance.

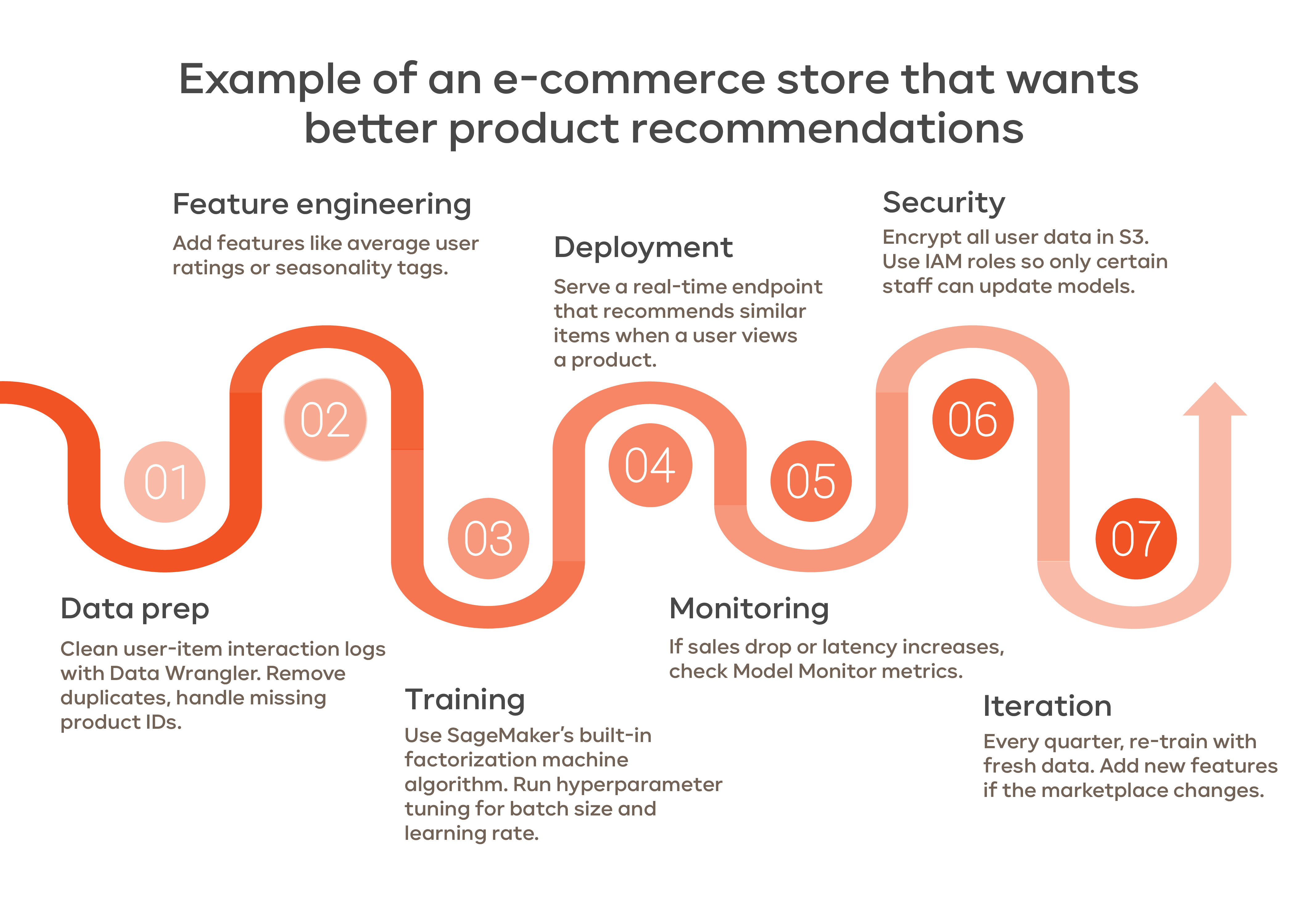

Data cleaning and preprocessing

You must clean your data to fix errors and remove noise. For example, if your dataset has misspelled categories or missing values, correct them before training. Simple steps, like removing duplicates, can improve model accuracy. Clean data gives your models a stable foundation to learn from.

Data cleaning also means checking for outliers and handling missing values. One method is median imputation, which fills gaps with the column’s median and is less sensitive to outliers than the mean. Another is dropping rows with too many gaps. If you combine multiple data sources, unify their formats. For instance, ensure date formats match and product IDs align across databases. Consistency here prevents headaches down the road and preserves data quality.
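The cleaning steps above can be sketched in plain Python. This is a minimal illustration, not a SageMaker or Data Wrangler API: the records, the two date formats, and the `max_missing` threshold are hypothetical assumptions chosen to show row dropping, format unification, deduplication, and median imputation in order.

```python
from datetime import datetime
from statistics import median

# Hypothetical records merged from two sources: an exact duplicate,
# a missing value, mixed date formats, and inconsistent ID casing.
RAW_ROWS = [
    {"product_id": "A-1", "date": "2024-03-01", "units": 5},
    {"product_id": "A-1", "date": "2024-03-01", "units": 5},
    {"product_id": "b-2", "date": "03/02/2024", "units": None},
    {"product_id": "C-3", "date": "2024-03-03", "units": 9},
    {"product_id": None, "date": None, "units": None},
]

# Assumed date formats used by the two sources.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y")


def to_iso_date(value):
    """Normalize a date string to YYYY-MM-DD by trying each known format."""
    if value is None:
        return None
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {value!r}")


def clean(rows, max_missing=1):
    # 1. Drop rows with too many gaps.
    rows = [r for r in rows if sum(v is None for v in r.values()) <= max_missing]

    # 2. Unify formats: ISO dates and upper-case product IDs.
    rows = [
        {
            **r,
            "date": to_iso_date(r["date"]),
            "product_id": r["product_id"].upper() if r["product_id"] else None,
        }
        for r in rows
    ]

    # 3. Remove exact duplicates, keeping the first occurrence.
    seen, unique = set(), []
    for r in rows:
        key = tuple(sorted(r.items()))
        if key not in seen:
            seen.add(key)
            unique.append(r)

    # 4. Median imputation for remaining missing values (computed after dedup,
    #    so duplicated rows do not bias the median).
    fill = median(r["units"] for r in unique if r["units"] is not None)
    return [{**r, "units": fill if r["units"] is None else r["units"]} for r in unique]


cleaned = clean(RAW_ROWS)
for row in cleaned:
    print(row)
```

The order matters: deduplicating before imputing keeps repeated rows from skewing the fill value.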

Feature engineering

Feature engineering transforms raw data into more meaningful inputs. Suppose you have timestamp data. You can extract hour-of-day or day-of-week features. These features make patterns such as daily or weekly cycles explicit, so the model can find them more easily. This step often improves accuracy.
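As a small sketch of the timestamp example, the standard library is enough to derive calendar features. The feature names and the weekend flag are illustrative choices, not a SageMaker convention.

```python
from datetime import datetime


def time_features(timestamp: str) -> dict:
    """Derive simple calendar features from an ISO-8601 timestamp string."""
    dt = datetime.fromisoformat(timestamp)
    return {
        "hour_of_day": dt.hour,          # 0-23
        "day_of_week": dt.weekday(),     # Monday=0 through Sunday=6
        "is_weekend": dt.weekday() >= 5,
    }


features = time_features("2024-03-02T18:45:00")
print(features)  # {'hour_of_day': 18, 'day_of_week': 5, 'is_weekend': True}
```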

Sometimes, it’s useful to join external data sources. Adding weather information to sales data might reveal seasonal trends. Encode categorical variables (for example, with one-hot encoding) and scale numeric features so they fall in comparable ranges. For large feature sets, consider dimensionality reduction techniques such as principal component analysis (PCA).
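Here is a minimal, dependency-free sketch of one-hot encoding and min-max scaling. It assumes the common fit/apply split: encoding categories and scaling bounds are learned from training data only and then reused on new data, so nothing from the test set leaks into training. The weather and sales values are hypothetical.

```python
def fit_one_hot(values):
    """Learn the sorted category list from training data."""
    return sorted(set(values))


def apply_one_hot(values, categories):
    """Encode each value as a 0/1 vector; unseen categories become all zeros."""
    return [[1 if v == c else 0 for c in categories] for v in values]


def fit_min_max(values):
    """Learn scaling bounds from training data only."""
    return min(values), max(values)


def apply_min_max(values, lo, hi):
    """Rescale to [0, 1] using training bounds (new values may fall outside)."""
    span = hi - lo
    return [0.0 if span == 0 else (v - lo) / span for v in values]


train_weather = ["rain", "sun", "rain", "snow"]
categories = fit_one_hot(train_weather)             # ['rain', 'snow', 'sun']
encoded = apply_one_hot(train_weather, categories)  # [[1, 0, 0], [0, 0, 1], [1, 0, 0], [0, 1, 0]]

train_sales = [10.0, 20.0, 30.0]
lo, hi = fit_min_max(train_sales)
scaled = apply_min_max(train_sales, lo, hi)          # [0.0, 0.5, 1.0]
```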

Using SageMaker Data Wrangler

SageMaker Data Wrangler helps you with data prep. It offers a visual interface to select data, apply transformations, and analyze quality. This tool speeds up your workflow and simplifies trying out new features. It also integrates well with the rest of the platform, so you can quickly move from data prep to training.

Data Wrangler also works with SageMaker Processing Jobs. Define transformations once, then process large datasets on an elastic cluster without manual setup. For massive datasets, this approach saves time and reduces complexity.
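To make the Processing Jobs idea concrete, the sketch below builds a request for the SageMaker `CreateProcessingJob` API as a plain dictionary. The job name, role ARN, container image, S3 paths, and the `transform.py` script (assumed to already be inside the image) are all hypothetical placeholders. Setting `S3DataDistributionType` to `ShardedByS3Key` gives each instance a different subset of the input objects, which is how the work spreads across the cluster.

```python
def processing_job_request(job_name, role_arn, image_uri, input_s3, output_s3,
                           instance_count=4, instance_type="ml.m5.xlarge"):
    """Request body for sagemaker.create_processing_job (all values are placeholders)."""
    return {
        "ProcessingJobName": job_name,
        "RoleArn": role_arn,
        "AppSpecification": {
            "ImageUri": image_uri,
            # Hypothetical script assumed to be baked into the container image.
            "ContainerEntrypoint": ["python3", "/opt/ml/processing/code/transform.py"],
        },
        "ProcessingResources": {
            "ClusterConfig": {
                "InstanceCount": instance_count,
                "InstanceType": instance_type,
                "VolumeSizeInGB": 30,
            }
        },
        "ProcessingInputs": [{
            "InputName": "raw",
            "S3Input": {
                "S3Uri": input_s3,
                "LocalPath": "/opt/ml/processing/input",
                "S3DataType": "S3Prefix",
                "S3InputMode": "File",
                # Split input objects across instances instead of copying all to each.
                "S3DataDistributionType": "ShardedByS3Key",
            },
        }],
        "ProcessingOutputConfig": {
            "Outputs": [{
                "OutputName": "clean",
                "S3Output": {
                    "S3Uri": output_s3,
                    "LocalPath": "/opt/ml/processing/output",
                    "S3UploadMode": "EndOfJob",
                },
            }]
        },
    }


request = processing_job_request(
    job_name="clean-sales-data-001",
    role_arn="arn:aws:iam::123456789012:role/ExampleSageMakerRole",
    image_uri="123456789012.dkr.ecr.us-east-1.amazonaws.com/example-transform:latest",
    input_s3="s3://example-bucket/raw/",
    output_s3="s3://example-bucket/clean/",
)
# With AWS credentials configured, you would submit it with:
#   import boto3
#   boto3.client("sagemaker").create_processing_job(**request)
```

Building the request as data first makes it easy to review and test before anything runs in your account.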

Recommendations for model training

Model training is where you turn data into a working ML system. Applying Amazon SageMaker best practices from the start helps you avoid repeated failures.

Hyperparameter tuning

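Hyperparameters are settings you choose before training, such as learning rate or tree depth, rather than values the model learns from data. Getting them right can noticeably boost accuracy. SageMaker’s built-in hyperparameter tuning jobs run multiple training jobs with different settings, several in parallel, and search for the combination that optimizes an objective metric you specify. This approach saves time and often yields more precise models. You can monitor results as jobs complete, and the tuning job reports the best-performing training job when it finishes.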
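A tuning job is driven by a configuration that names the objective, the search budget, and the parameter ranges. The sketch below builds the `HyperParameterTuningJobConfig` dictionary for the `CreateHyperParameterTuningJob` API, assuming the built-in XGBoost algorithm trained with `eval_metric=auc` (hence the `validation:auc` objective). The budget of 20 jobs, 4 in parallel, and the ranges for `eta` and `max_depth` are illustrative choices, not recommendations.

```python
def tuning_job_config(max_jobs=20, max_parallel=4):
    """HyperParameterTuningJobConfig, assuming built-in XGBoost with eval_metric=auc."""
    if max_parallel > max_jobs:
        raise ValueError("max_parallel cannot exceed max_jobs")
    return {
        "Strategy": "Bayesian",  # other strategies, such as "Random", are also available
        "HyperParameterTuningJobObjective": {
            "Type": "Maximize",
            "MetricName": "validation:auc",
        },
        "ResourceLimits": {
            "MaxNumberOfTrainingJobs": max_jobs,      # total trials
            "MaxParallelTrainingJobs": max_parallel,  # trials running at the same time
        },
        "ParameterRanges": {
            # Range bounds are passed as strings in this API.
            "ContinuousParameterRanges": [
                {"Name": "eta", "MinValue": "0.01", "MaxValue": "0.3",
                 "ScalingType": "Logarithmic"},
            ],
            "IntegerParameterRanges": [
                {"Name": "max_depth", "MinValue": "3", "MaxValue": "10",
                 "ScalingType": "Auto"},
            ],
        },
    }


config = tuning_job_config()
# With AWS credentials and a training job definition (not shown), you would call:
#   boto3.client("sagemaker").create_hyper_parameter_tuning_job(
#       HyperParameterTuningJobName="xgb-tuning-001",
#       HyperParameterTuningJobConfig=config,
#       TrainingJobDefinition=training_job_definition,
#   )
```

Raising `MaxParallelTrainingJobs` finishes sooner, but Bayesian search learns less between rounds, so a moderate value is a common compromise.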

Distributed training

Large datasets or complex models can slow training. Distributed training splits the workload across multiple instances or GPUs. SageMaker supports distributed training out of the box, including data parallelism, where each worker processes a different shard of the data, and model parallelism, where a model too large for one device is split across several. This makes it easier to handle huge datasets without grinding your workflow to a halt.
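The core of data-parallel training fits in a few lines. This is a single-process simulation, not SageMaker’s distributed library: each "worker" computes a gradient on its own shard, the gradients are averaged (the all-reduce step), and every replica applies the same update. The linear model, learning rate, and synthetic data on y = 3x + 1 are illustrative assumptions.

```python
def mse_gradient(w, b, batch):
    """Gradient of mean squared error for the model y = w*x + b on one batch."""
    n = len(batch)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in batch) / n
    return grad_w, grad_b


def shard(data, num_workers):
    """Split the dataset round-robin so each worker gets its own slice."""
    return [data[i::num_workers] for i in range(num_workers)]


def train_data_parallel(data, num_workers=4, lr=0.01, steps=3000):
    shards = shard(data, num_workers)
    w = b = 0.0
    for _ in range(steps):
        # Each worker computes a gradient on its shard only. In a real cluster
        # these run concurrently on separate instances; here they run in a loop.
        grads = [mse_gradient(w, b, s) for s in shards]
        # Synchronize: average the workers' gradients so all replicas
        # apply the same update and stay identical.
        grad_w = sum(g[0] for g in grads) / num_workers
        grad_b = sum(g[1] for g in grads) / num_workers
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


data = [(x / 10, 3 * (x / 10) + 1) for x in range(40)]  # points on y = 3x + 1
w, b = train_data_parallel(data)
print(round(w, 3), round(b, 3))
```

Because the four shards are the same size, the averaged gradient equals the full-batch gradient, so the distributed run follows the same path as single-machine training while each worker touches only a quarter of the data.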

Experiment tracking

It’s easy to lose track of past runs and versions. Keep logs of your experiments, models, and settings. SageMaker Experiments, available in SageMaker Studio, records the parameters and metrics of each run, and the SageMaker Model Registry versions trained models. This lets you reproduce results, compare models, and avoid repeating past mistakes.
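To show what experiment tracking captures, here is a hand-rolled stand-in that appends each run to a JSON Lines file and picks the best one. It is not the SageMaker Experiments API; the `log_run` and `best_run` helpers, the `val_auc` metric name, and the version labels are hypothetical.

```python
import json
import os
import tempfile
import time


def log_run(path, params, metrics, model_version):
    """Append one run (settings, results, model version) as a JSON line."""
    record = {"timestamp": time.time(), "model_version": model_version,
              "params": params, "metrics": metrics}
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record, sort_keys=True) + "\n")
    return record


def best_run(path, metric, higher_is_better=True):
    """Return the logged run with the best value of the given metric."""
    with open(path, encoding="utf-8") as f:
        runs = [json.loads(line) for line in f if line.strip()]
    pick = max if higher_is_better else min
    return pick(runs, key=lambda r: r["metrics"][metric])


with tempfile.TemporaryDirectory() as tmp:
    log_path = os.path.join(tmp, "runs.jsonl")
    log_run(log_path, {"max_depth": 4, "eta": 0.1}, {"val_auc": 0.81}, "v1")
    log_run(log_path, {"max_depth": 6, "eta": 0.05}, {"val_auc": 0.84}, "v2")
    best = best_run(log_path, "val_auc")

print(best["model_version"], best["params"])  # v2 {'eta': 0.05, 'max_depth': 6}
```

Whatever tool you use, the point is the same: every result is stored next to the exact settings that produced it.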

Best practices for model deployment

Deploying a model is not the end of the story. You must choose the right endpoint configuration, run tests, and manage scaling.

Choosing the right deployment architecture

SageMaker lets you deploy in several ways:

- Real-time endpoints: For low-latency predictions, such as chatbots or recommendation engines.

- Batch transforms: For periodic processing, like generating predictions for an entire dataset overnight.

- Edge devices: Deploy models on IoT devices for local predictions. This reduces latency and sometimes costs.

Pick the architecture that fits your use case. For instance, a fraud detection system that needs instant responses should use real-time endpoints. A monthly sales forecast can run on a batch job.
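For the monthly forecast case, a batch transform job reads inputs from S3 and writes predictions back, with no endpoint left running. The sketch below builds a request for the SageMaker `CreateTransformJob` API. The job name, model name (assumed to be already registered with `CreateModel`), bucket paths, and instance sizing are hypothetical.

```python
def batch_transform_request(job_name, model_name, input_s3, output_s3,
                            instance_type="ml.m5.xlarge", instance_count=2):
    """Request body for sagemaker.create_transform_job (all values are placeholders)."""
    return {
        "TransformJobName": job_name,
        "ModelName": model_name,
        "TransformInput": {
            "DataSource": {"S3DataSource": {"S3DataType": "S3Prefix", "S3Uri": input_s3}},
            "ContentType": "text/csv",
            "SplitType": "Line",  # send records to the model line by line
        },
        "TransformOutput": {"S3OutputPath": output_s3, "AssembleWith": "Line"},
        "TransformResources": {"InstanceType": instance_type,
                               "InstanceCount": instance_count},
    }


job = batch_transform_request(
    job_name="monthly-sales-forecast-2024-03",
    model_name="sales-forecast-model",
    input_s3="s3://example-bucket/forecast-input/",
    output_s3="s3://example-bucket/forecast-output/",
)
# With AWS credentials configured:
#   boto3.client("sagemaker").create_transform_job(**job)
```

The instances exist only for the duration of the job, which is why batch inference suits periodic workloads like this one.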

A/B testing and continuous integration

Avoid relying on guesswork. A/B testing pits two versions of a model against each other on live traffic to see which performs better; in SageMaker, you can host both as production variants behind one endpoint and split traffic between them by weight. Continuous integration and delivery (CI/CD) lets you test and ship small updates often. This reduces risk and helps you catch errors early. When you adopt these Amazon SageMaker best practices, you maintain a stable pipeline that improves over time.
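A minimal sketch of an A/B setup: an endpoint configuration with two production variants, where SageMaker routes each variant a share of requests equal to its weight divided by the sum of all weights. The model names, instance type, and 10% challenger share are hypothetical; the dictionary matches the `CreateEndpointConfig` request shape.

```python
def ab_endpoint_config(config_name, model_a, model_b, share_b=0.1,
                       instance_type="ml.m5.large"):
    """EndpointConfig with two weighted production variants (values are placeholders)."""
    if not 0.0 <= share_b <= 1.0:
        raise ValueError("share_b must be between 0 and 1")

    def variant(name, model, weight):
        return {"VariantName": name, "ModelName": model, "InstanceType": instance_type,
                "InitialInstanceCount": 1, "InitialVariantWeight": weight}

    return {
        "EndpointConfigName": config_name,
        "ProductionVariants": [
            variant("ModelA", model_a, 1.0 - share_b),  # current model
            variant("ModelB", model_b, share_b),        # challenger
        ],
    }


cfg = ab_endpoint_config("fraud-ab-test", "fraud-model-v3", "fraud-model-v4")
# With AWS credentials configured:
#   sm = boto3.client("sagemaker")
#   sm.create_endpoint_config(**cfg)
#   sm.create_endpoint(EndpointName="fraud-endpoint", EndpointConfigName="fraud-ab-test")
```

If the challenger wins, you can shift traffic gradually with `update_endpoint_weights_and_capacities` instead of redeploying.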

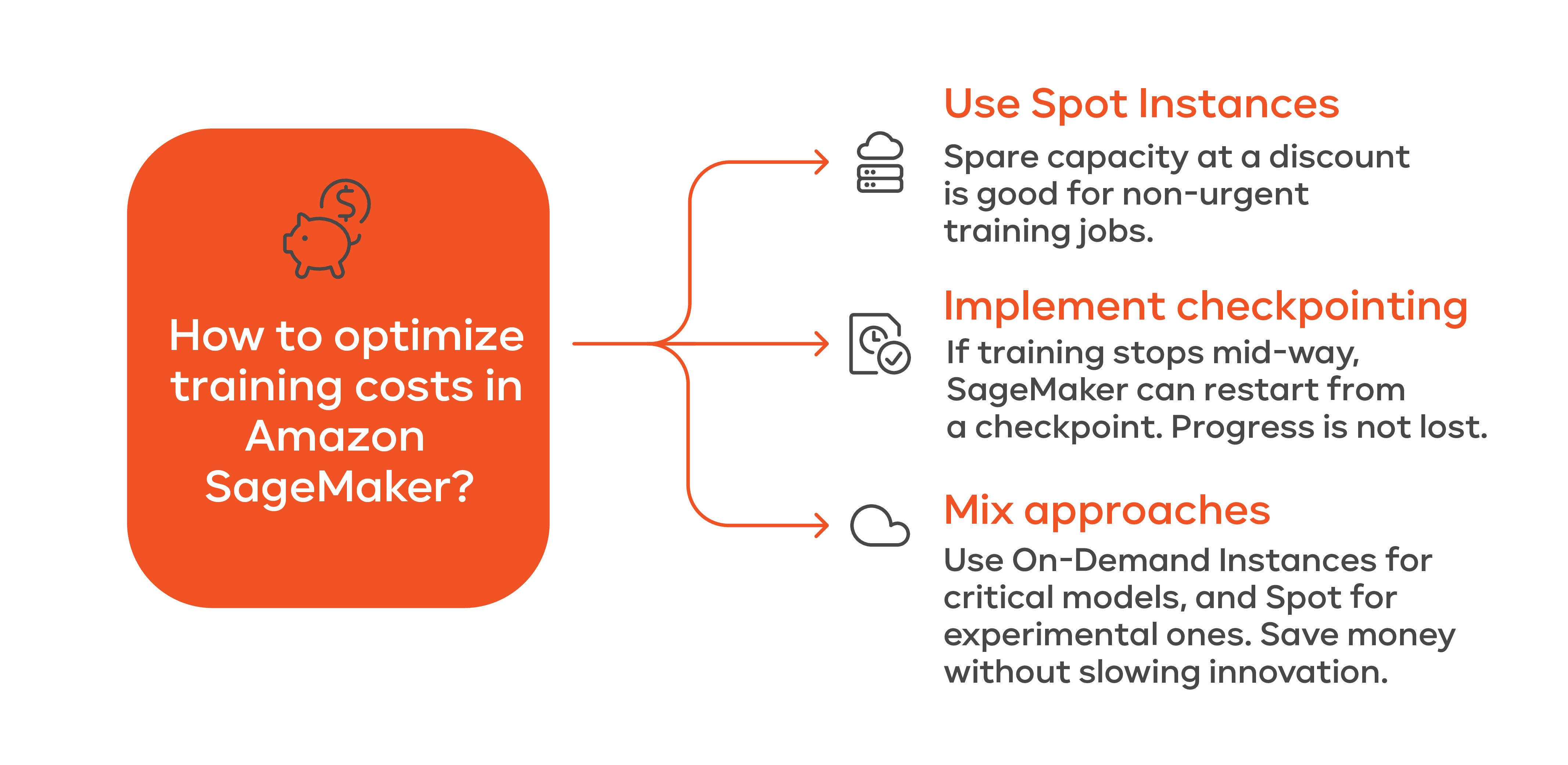

Autoscaling and cost management

Scaling up or down is key. SageMaker’s autoscaling features help ensure you’re not overpaying for unused compute. For example, if your traffic drops at night, autoscaling can reduce the number of instances behind your endpoint. You pay less while still meeting performance needs. For more details on cost optimization, see AWS Cost Optimization Best Practices.
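Endpoint autoscaling is configured through Application Auto Scaling: you register the variant’s instance count as a scalable target, then attach a target-tracking policy. The sketch below builds both requests. The endpoint name, the 1 to 4 instance range, the target of 100 invocations per instance per minute, and the cooldowns are illustrative assumptions; tune them against your own load tests.

```python
def autoscaling_requests(endpoint_name, variant_name="AllTraffic",
                         min_instances=1, max_instances=4, target_invocations=100.0):
    """Requests for register_scalable_target and put_scaling_policy (values are placeholders)."""
    resource_id = f"endpoint/{endpoint_name}/variant/{variant_name}"
    dimension = "sagemaker:variant:DesiredInstanceCount"
    register = {
        "ServiceNamespace": "sagemaker",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "MinCapacity": min_instances,
        "MaxCapacity": max_instances,
    }
    policy = {
        "PolicyName": f"{endpoint_name}-invocations-target",
        "ServiceNamespace": "sagemaker",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            # Invocations per instance per minute that the policy tries to hold.
            "TargetValue": target_invocations,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance"
            },
            "ScaleInCooldown": 300,  # seconds; scale in cautiously
            "ScaleOutCooldown": 60,  # seconds; scale out quickly
        },
    }
    return register, policy


register, policy = autoscaling_requests("recommender-endpoint")
# With AWS credentials configured:
#   aas = boto3.client("application-autoscaling")
#   aas.register_scalable_target(**register)
#   aas.put_scaling_policy(**policy)
```

The asymmetric cooldowns reflect a common trade-off: adding capacity fast protects latency, while removing it slowly avoids flapping during brief dips.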

_____________________________________________________________________________

Looking to unlock the full potential of your ML workflows?

Consider expert guidance. Check out IT-Magic’s AWS Generative AI Services to see how you can better harness SageMaker’s capabilities to boost your results even further.

_____________________________________________________________________________

Monitoring and supporting models

Your job doesn’t end after deployment. Models drift over time. Data changes. Trends evolve. That’s why continuous monitoring is crucial. Tools like SageMaker Model Monitor watch for data quality and drift. CloudWatch logs and metrics can alert you when something’s off. By acting on these alerts, you maintain model accuracy and avoid unpleasant surprises.
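As one example of a CloudWatch alert, the sketch below builds a `PutMetricAlarm` request on the endpoint’s `ModelLatency` metric, which SageMaker reports in microseconds. The endpoint name, the 500 ms threshold, and the five one-minute evaluation periods are hypothetical choices; in practice you would also add `AlarmActions` (for example, an SNS topic) so someone gets notified.

```python
def latency_alarm_request(endpoint_name, variant_name="AllTraffic", threshold_ms=500):
    """Request body for cloudwatch.put_metric_alarm on endpoint latency (placeholders)."""
    return {
        "AlarmName": f"{endpoint_name}-high-latency",
        "Namespace": "AWS/SageMaker",
        "MetricName": "ModelLatency",  # reported in microseconds
        "Dimensions": [
            {"Name": "EndpointName", "Value": endpoint_name},
            {"Name": "VariantName", "Value": variant_name},
        ],
        "Statistic": "Average",
        "Period": 60,             # one-minute buckets
        "EvaluationPeriods": 5,   # alarm after five consecutive breaching minutes
        "Threshold": threshold_ms * 1000,  # convert milliseconds to microseconds
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",
    }


alarm = latency_alarm_request("fraud-endpoint")
# With AWS credentials configured:
#   boto3.client("cloudwatch").put_metric_alarm(**alarm)
```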

Monitoring involves more than just raw metrics. Set thresholds so you know when latency or error rates spike. Model Monitor can compare production data against the baseline computed from your training data. If feature distributions change, you’ll know the inputs have shifted, whether because user behavior changed or an upstream pipeline broke. Catching these shifts early allows you to retrain or fine-tune models before performance suffers.
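To illustrate baseline-versus-production comparison, here is a sketch of the population stability index (PSI), a common drift statistic for a single numeric feature. PSI is a swapped-in technique for illustration; Model Monitor computes its own baseline statistics and constraints and does not necessarily use PSI. The bin count, the epsilon, and the sample data are assumptions. A frequently quoted rule of thumb treats PSI above roughly 0.2 to 0.25 as a significant shift.

```python
import math


def population_stability_index(baseline, production, bins=10, eps=1e-6):
    """PSI between two samples of one numeric feature, using equal-width bins
    fitted on the baseline. 0 means the binned distributions are identical."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def bin_shares(values):
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the baseline range land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    expected, actual = bin_shares(baseline), bin_shares(production)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


baseline = [i / 100 for i in range(100)]        # training-time feature values
stable = [i / 100 for i in range(100)]          # production matches training
shifted = [0.5 + i / 200 for i in range(100)]   # production values drifted upward

print(round(population_stability_index(baseline, stable), 6))  # 0.0
print(population_stability_index(baseline, shifted) > 0.2)     # True
```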

Also, consider third-party monitoring tools like Datadog or Prometheus. They can offer richer graphs and custom dashboards. This makes your ML workflow more transparent and resilient. With constant feedback loops, you keep improving, ensuring your models deliver consistent value.

AWS SageMaker security best practices

Security should never be an afterthought. AWS SageMaker security best practices ensure that your models and data remain protected.

Encryption, access control, and compliance checks form the backbone of secure ML workflows. The good news: SageMaker integrates well with AWS security tools and makes it straightforward to set up a robust security posture.

Encryption

Encrypt data at rest and in transit. Use AWS Key Management Service (KMS) to handle keys. Ensure all data stored in Amazon S3 or moving between services is encrypted. This keeps it safe from unauthorized access. For detailed instructions, see the AWS SageMaker Documentation.

Also, make sure clients call endpoints over HTTPS (TLS), so requests and predictions can’t be read in transit. Combined with encryption at rest, this gives you a strong security baseline.
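The sketch below shows where a KMS key plugs into SageMaker and S3 requests. The first function returns encryption-related fields to merge into a `CreateTrainingJob` request (which also needs a job name, role, algorithm, and stopping condition, not shown). The second returns keyword arguments for S3 `put_object` with SSE-KMS. The key ARN, bucket, and instance settings are hypothetical.

```python
def encrypted_training_settings(kms_key_arn, output_s3):
    """Encryption-related fields to merge into a create_training_job request."""
    return {
        # Encrypt model artifacts written to S3 with your KMS key.
        "OutputDataConfig": {"S3OutputPath": output_s3, "KmsKeyId": kms_key_arn},
        # Encrypt the storage volumes attached to the training instances.
        "ResourceConfig": {
            "InstanceType": "ml.m5.xlarge",
            "InstanceCount": 2,
            "VolumeSizeInGB": 50,
            "VolumeKmsKeyId": kms_key_arn,
        },
        # Encrypt traffic between instances during distributed training.
        "EnableInterContainerTrafficEncryption": True,
    }


def encrypted_upload_args(bucket, key, body, kms_key_arn):
    """Keyword arguments for s3.put_object using server-side encryption with KMS."""
    return {"Bucket": bucket, "Key": key, "Body": body,
            "ServerSideEncryption": "aws:kms", "SSEKMSKeyId": kms_key_arn}


KEY = "arn:aws:kms:us-east-1:123456789012:key/example-key-id"
settings = encrypted_training_settings(KEY, "s3://example-bucket/models/")
upload = encrypted_upload_args("example-bucket", "data/train.csv", b"x,y\n1,2\n", KEY)
# With AWS credentials configured:
#   boto3.client("s3").put_object(**upload)
```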

Access management

Use AWS Identity and Access Management (IAM) roles to control who can access SageMaker. Grant the least privilege. Give data scientists access to training jobs, not IAM role editing. Restrict who can create endpoints. Assign roles to teams based on their tasks. This reduces the risk of human error and misuse.
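Here is a least-privilege IAM policy for a data scientist role, expressed as a Python dictionary. It is a sketch under assumptions: the account ID, region, and role name are placeholders, and a real setup may need extra permissions (for example, to read training data in S3). It allows running training jobs, allows passing only one execution role and only to SageMaker, and explicitly denies endpoint creation and role editing. An explicit `Deny` overrides any `Allow` granted elsewhere.

```python
import json


def data_scientist_policy(account_id, region, role_name):
    """Least-privilege IAM policy document (placeholders for account, region, role)."""
    training_jobs = f"arn:aws:sagemaker:{region}:{account_id}:training-job/*"
    execution_role = f"arn:aws:iam::{account_id}:role/{role_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Sid": "RunTrainingJobs", "Effect": "Allow",
             "Action": ["sagemaker:CreateTrainingJob",
                        "sagemaker:DescribeTrainingJob",
                        "sagemaker:StopTrainingJob"],
             "Resource": training_jobs},
            {"Sid": "PassOnlyTheTrainingRole", "Effect": "Allow",
             "Action": "iam:PassRole", "Resource": execution_role,
             "Condition": {"StringEquals": {"iam:PassedToService": "sagemaker.amazonaws.com"}}},
            {"Sid": "NoEndpointsOrRoleEditing", "Effect": "Deny",
             "Action": ["sagemaker:CreateEndpoint", "sagemaker:CreateEndpointConfig",
                        "iam:CreateRole", "iam:PutRolePolicy", "iam:AttachRolePolicy"],
             "Resource": "*"},
        ],
    }


policy_doc = data_scientist_policy("123456789012", "us-east-1", "ExampleTrainingRole")
print(json.dumps(policy_doc, indent=2))
```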

Store sensitive keys and credentials in AWS Secrets Manager rather than hard-coding them. This keeps credentials out of logs and version control systems.
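A minimal sketch of reading a credential at runtime instead of embedding it in code. The function takes a client so it works with `boto3.client("secretsmanager")` in production; here a hypothetical stub stands in so the example runs offline. The secret name and its JSON fields are assumptions.

```python
import json


def get_db_credentials(client, secret_id):
    """Fetch a JSON secret at runtime instead of hard-coding it."""
    response = client.get_secret_value(SecretId=secret_id)
    return json.loads(response["SecretString"])


class StubSecretsClient:
    """Hypothetical stand-in for boto3.client("secretsmanager") so the sketch runs offline."""

    def get_secret_value(self, SecretId):
        return {"SecretString": json.dumps({"username": "ml_app", "password": "example"})}


creds = get_db_credentials(StubSecretsClient(), "prod/feature-store/db")
# In production:
#   import boto3
#   creds = get_db_credentials(boto3.client("secretsmanager"), "prod/feature-store/db")
# Use creds["username"] and creds["password"] directly; avoid printing or logging them.
```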

Compliance and audit

Check which compliance standards apply to your industry. If you handle healthcare data, follow HIPAA guidelines. If you process payment card data, follow PCI DSS. AWS offers a range of compliance programs. Use AWS Config to track resource configuration changes, and AWS CloudTrail to log API calls so you can audit actions over time.

Run regular audits. Compare your environment against baseline policies so deviations surface quickly; AWS Config rules can flag noncompliant resources automatically. This builds trust with customers and stakeholders, who see that you take data protection and regulatory requirements seriously.
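Comparing an environment against a baseline can be as simple as running a set of checks over resource descriptions. The sketch below audits dictionaries shaped like SageMaker `DescribeEndpointConfig` responses. The two rules, their names, and the snapshot data are hypothetical examples of a baseline policy; in practice, AWS Config rules can run comparable checks continuously.

```python
# Each rule inspects a dict shaped like a DescribeEndpointConfig response
# and returns True when the configuration complies.
BASELINE_RULES = {
    "kms-key-configured": lambda cfg: bool(cfg.get("KmsKeyId")),
    "data-capture-enabled": lambda cfg: cfg.get("DataCaptureConfig", {}).get("EnableCapture", False),
}


def audit(endpoint_configs):
    """Return (config name, rule name) pairs for every failed baseline rule."""
    findings = []
    for cfg in endpoint_configs:
        for rule_name, check in BASELINE_RULES.items():
            if not check(cfg):
                findings.append((cfg["EndpointConfigName"], rule_name))
    return findings


# Hypothetical snapshot of two endpoint configurations.
snapshot = [
    {"EndpointConfigName": "fraud-v3",
     "KmsKeyId": "arn:aws:kms:us-east-1:123456789012:key/example",
     "DataCaptureConfig": {"EnableCapture": True}},
    {"EndpointConfigName": "forecast-v1"},
]

for name, rule in audit(snapshot):
    print(f"{name}: failed {rule}")
# forecast-v1: failed kms-key-configured
# forecast-v1: failed data-capture-enabled
```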

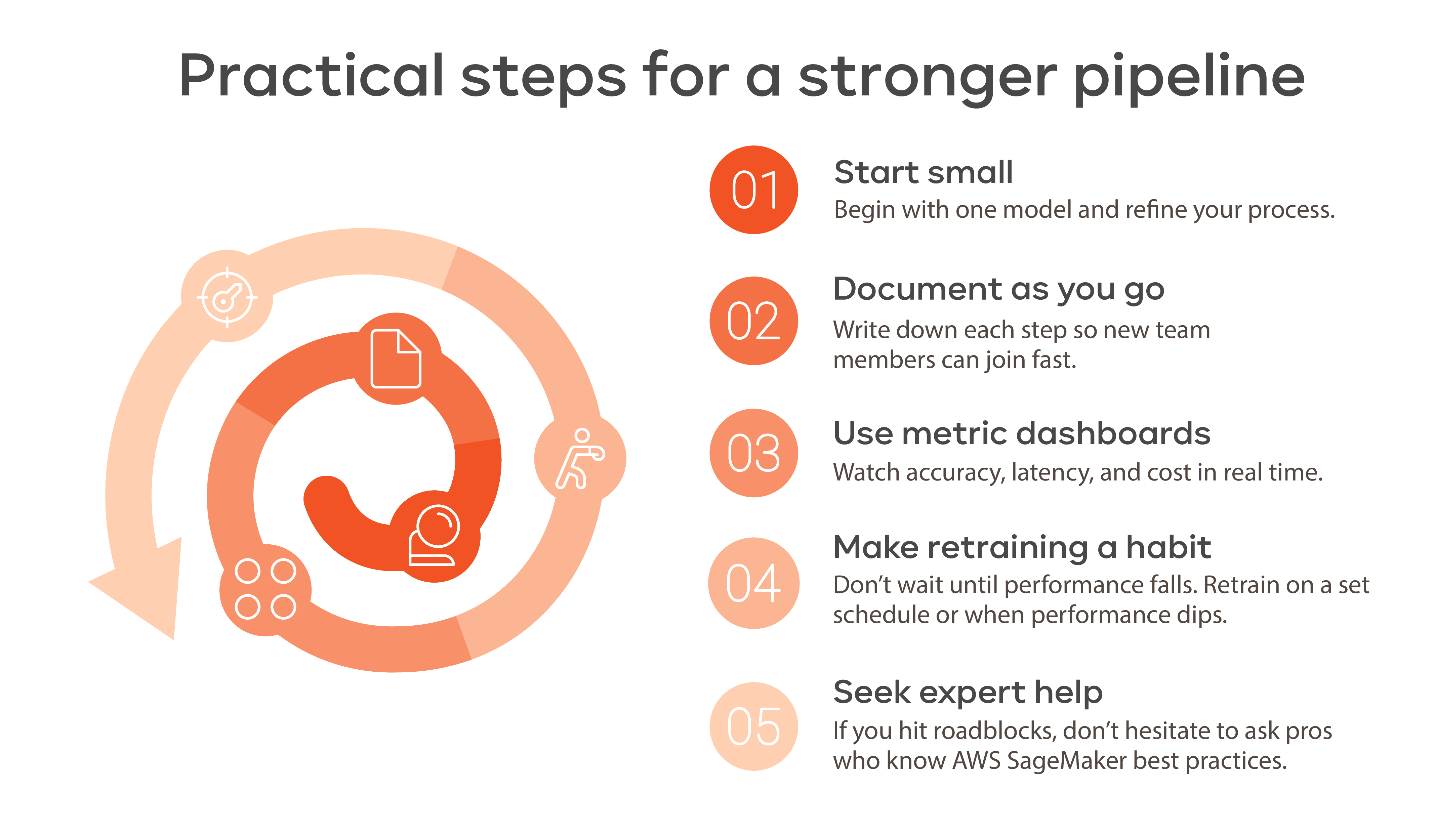

Conclusion

By following these Amazon SageMaker best practices, you make machine learning more predictable and effective. Start with solid data preparation, then tune models with care. Deploy them with the right architecture and keep watch for changes. Guard your data with strong security. Each step makes your ML projects more valuable.

Better practices lead to better models. Save time, lower costs, and boost accuracy. Your team can focus on insights, not guesswork. Embrace SageMaker best practices, and turn complexity into clarity.