We live in a time when artificial intelligence powers automation, pattern recognition, and predictive insights across many domains. Still, big models can be expensive, slow, and unwieldy. AI model optimization offers a chance to trim, tune, and reshape these models. By doing so, organizations can preserve accuracy while using fewer resources.

Because AI is in almost every industry, the call for efficient and adaptable systems has grown. AI model optimization meets this demand. It helps create smarter solutions that do not waste energy or time. This article will uncover the value of artificial intelligence optimization and outline critical model optimization techniques. We’ll also see real-world examples where AI optimization brings powerful benefits.

Understanding model optimization

AI model optimization involves improving your AI models' performance and efficiency while cutting needless complexity. This approach fine-tunes architectures, parameters, and training steps. Done well, optimizing a model lets you handle shifting workloads and real-time tasks without skyrocketing costs.

Importance in AI development

Why is model optimization a key factor in AI development? First, an effective strategy lets your AI system reach the performance you need with minimal resources. Second, optimized models often adapt better to changing data and are less likely to break under heavy load. Common improvements include:

- Reduced inference time

- Better accuracy thanks to careful regularization

- Lower memory and computational costs

Researchers often find that well-pruned and well-tuned models can rival or surpass bigger, unoptimized versions. A Google Research study showed how selective pruning can preserve accuracy while making networks leaner. These findings show why model optimization is vital in modern artificial intelligence.

How model optimization contributes to faster processing, reduced resource usage, and improved performance

Picture a neural network with millions, or even billions, of parameters. Even with powerful hardware, that's hefty. But if you apply quantization, you switch from 32-bit floats to 8-bit integers. This step alone cuts the memory needed to store the weights by a factor of four. That often means faster computation and easier deployment.
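The four-fold figure is straightforward arithmetic: each parameter shrinks from 4 bytes to 1 byte. The short Python sketch below works it through for a hypothetical 100-million-parameter model. It counts weight storage only; activations, runtime buffers, and the small scale factors a real quantized model also keeps are ignored.

```python
def weight_memory_mb(num_params: int, bits_per_param: int) -> float:
    """Storage needed for the weights alone, in megabytes (1 MB = 1,000,000 bytes)."""
    return num_params * bits_per_param / 8 / 1_000_000

# Hypothetical model size, chosen only for illustration.
params = 100_000_000
fp32_mb = weight_memory_mb(params, 32)  # 400.0
int8_mb = weight_memory_mb(params, 8)   # 100.0
print(f"FP32: {fp32_mb:.0f} MB, INT8: {int8_mb:.0f} MB, {fp32_mb / int8_mb:.0f}x smaller")
```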

Model pruning also helps by removing connections or neurons that add little value. The slimmer network can deliver faster inference and a smaller carbon footprint. Such gains matter in large-scale operations: when you handle tasks like anomaly detection, finishing the job in half the time saves money and energy.

Significance of optimizing AI models

AI model optimization is not a luxury. It’s a must-have step for any group that wants to boost output while controlling spending. Optimized models bring both short- and long-term benefits. Below, you will find the most significant advantages.

Boosting performance and operational efficiency

For tasks like classification, regression, or image analysis, AI model optimization can improve results. At the same time, you reduce the risk of wasted compute cycles. Improved operational efficiency shows up in:

- Faster development cycles, thanks to shorter training sessions

- Near-instant inference in real-time applications

- Smoother deployment across remote or edge devices

When you’re poised to react to new market data, every second counts. A sleek model is less costly and easier to maintain. That advantage applies to use cases in many sectors, from e-commerce to analytics.

Expanding use cases across various sectors

Artificial intelligence optimization doesn’t only interest tech giants. It now has broad relevance in fields like:

Retail & e-commerce: Optimized models speed up and improve core operations such as personalization, inventory management, customer service, and fraud detection.

Healthcare: Efficient imaging models help detect tumors or other anomalies. Smaller hospitals, with fewer resources, can run these solutions on local devices.

Finance: Fast, optimized systems catch fraudulent transactions in real time. This upgrade cuts overhead costs and speeds up approvals.

Manufacturing: Real-time quality checks rely on rapid AI inference. Optimized models do this without large hardware setups.

By applying AI model optimization, you also pave the way for new kinds of applications that may have been too big or too costly in the past.

Ensuring relevance amid changing data environments

Data patterns can shift, and a model trained on old data can falter in new conditions. With AI model optimization techniques, you can retrain faster because your model is smaller or more flexible. As a result, you preserve strong performance during concept drift or seasonal changes.

Frequent re-optimizing also helps maintain your model’s value as inputs and user demands evolve. This ongoing process ensures your AI stays current and doesn’t lose potency. It’s a simple but powerful approach.

AI optimization (AIO) and process enhancement

AI optimization (AIO) covers many strategies for better modeling and improved workflows. You can tackle hyperparameter tuning, feature engineering, transfer learning, and more. Each step refines the AI pipeline for maximum results.

Tuning hyperparameters

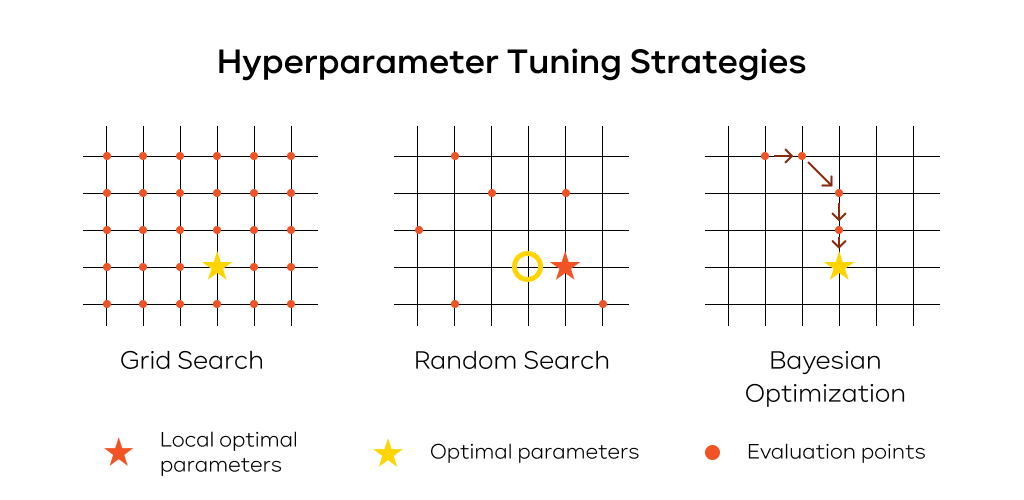

Hyperparameters guide how fast your model learns, how big the batches are, or how layers connect. Picking the right settings can be the difference between success and stagnation. Popular strategies include:

- Grid search: You pick a set of candidate values for each hyperparameter and test every possible combination.

- Random search: You sample random values within given limits. It often finds good settings faster than a full grid search, especially when only a few hyperparameters really matter.

- Bayesian optimization: You build a model of how past settings performed and use it to choose the most promising settings to try next. The aim is to find strong hyperparameters with fewer evaluations.

In each method, metrics measured on a validation set show which combination works best. The outcome is a balanced mix of accuracy and training speed.
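To make grid search and random search concrete, here is a minimal, framework-free Python sketch. The `validation_loss` function is a hypothetical stand-in for "train the model with these settings and measure validation loss"; in practice each call would be a full, and costly, training run.

```python
import itertools
import math
import random

def validation_loss(learning_rate, batch_size):
    """HYPOTHETICAL stand-in for training a model and returning its validation loss.
    This toy function is lowest near learning_rate=1e-3 and batch_size=64."""
    return (math.log10(learning_rate) + 3) ** 2 + (math.log2(batch_size) - 6) ** 2 / 4

def grid_search(grid):
    """Evaluate every combination of candidate values; return (best_loss, best_params)."""
    best = None
    for lr, bs in itertools.product(grid["learning_rate"], grid["batch_size"]):
        loss = validation_loss(lr, bs)
        if best is None or loss < best[0]:
            best = (loss, {"learning_rate": lr, "batch_size": bs})
    return best

def random_search(n_trials, seed=0):
    """Evaluate n_trials randomly sampled settings; return (best_loss, best_params)."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        lr = 10 ** rng.uniform(-5, -1)  # sample the learning rate on a log scale
        bs = rng.choice([16, 32, 64, 128, 256])
        loss = validation_loss(lr, bs)
        if best is None or loss < best[0]:
            best = (loss, {"learning_rate": lr, "batch_size": bs})
    return best

grid = {"learning_rate": [1e-4, 1e-3, 1e-2], "batch_size": [32, 64, 128]}
print("grid search:", grid_search(grid))
print("random search:", random_search(n_trials=20))
```

Grid search evaluates all nine combinations here, while random search spends a fixed budget of trials. Sampling the learning rate on a log scale is a common choice because its useful values span several orders of magnitude. Libraries such as Optuna, whose default TPE sampler is a Bayesian-style method, replace the purely random sampling step with a model that proposes promising settings based on earlier trials.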

Engineering relevant features

Your model’s performance often hinges on how you represent data. That’s why feature engineering is crucial. This process includes:

- Dimensionality reduction (like PCA)

- Picking key features or building domain-specific metrics

- Cleaning up missing or noisy inputs

By focusing on the most informative features, you shrink the space your model has to search, which can speed up learning and improve results. Feature engineering can also cut resource demands, since fewer features mean fewer computations.
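Two of the steps above, cleaning missing inputs and dropping uninformative features, can be sketched in plain Python. This is a minimal illustration on a made-up table: missing values are filled with the column mean, and features whose variance is (almost) zero are removed because they cannot help the model tell examples apart. For dimensionality reduction on real data, a library routine such as scikit-learn's `PCA` class is the more typical choice.

```python
from statistics import mean, pvariance

def impute_missing(rows):
    """Replace None entries with the mean of the observed values in that column."""
    n_cols = len(rows[0])
    col_means = [mean([r[j] for r in rows if r[j] is not None]) for j in range(n_cols)]
    return [[col_means[j] if r[j] is None else r[j] for j in range(n_cols)] for r in rows]

def drop_low_variance(rows, names, min_variance=1e-6):
    """Keep only the columns whose variance exceeds min_variance."""
    keep = [j for j in range(len(names)) if pvariance([r[j] for r in rows]) > min_variance]
    return [[r[j] for j in keep] for r in rows], [names[j] for j in keep]

# Made-up example table: one missing income value and one constant column.
names = ["age", "income_k", "constant_flag"]
rows = [[25, 40.0, 1], [32, None, 1], [47, 85.0, 1], [51, 90.0, 1]]
clean = impute_missing(rows)
reduced, kept = drop_low_variance(clean, names)
print(kept)  # ['age', 'income_k']
```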

Utilizing transfer learning

Transfer learning lets you borrow knowledge from existing, pretrained networks, so you don't need to start from zero. You can keep most of the network fixed and retrain only the last layers on your specific dataset. This method saves time, especially if your dataset is small.

It's also often more robust than training from scratch, especially when data is limited. The pretrained network has already learned general patterns; you only have to adapt them to your new task. This tactic shines in niche domains like medical imaging, where data might be scarce.
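The sketch below illustrates the idea in plain Python under heavy simplification. `PRETRAINED_W` is a hypothetical, hard-coded stand-in for a network trained on another task; its weights stay frozen, and only a new logistic-regression "head" is trained on a tiny made-up dataset. In a framework like PyTorch, the equivalent step is typically setting `requires_grad = False` on the pretrained layers before training the new ones.

```python
import math

# HYPOTHETICAL "pretrained" weights standing in for a network trained on another task.
# They are frozen: the training loop below never modifies them.
PRETRAINED_W = [[0.9, -0.4], [0.3, 0.8]]

def extract_features(x):
    """Frozen backbone: a fixed linear layer followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]

def train_head(data, epochs=200, lr=0.5):
    """Train only a new logistic-regression head on top of the frozen features."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            f = extract_features(x)
            logit = sum(wi * fi for wi, fi in zip(w, f)) + b
            p = 1 / (1 + math.exp(-logit))
            grad = p - y  # gradient of the log loss with respect to the logit
            w = [wi - lr * grad * fi for wi, fi in zip(w, f)]
            b -= lr * grad
    return w, b

def predict(w, b, x):
    f = extract_features(x)
    return 1 if sum(wi * fi for wi, fi in zip(w, f)) + b > 0 else 0

# Tiny made-up dataset for the new task: (input, label) pairs.
data = [([2.0, 0.0], 1), ([1.5, 0.5], 1), ([0.0, 2.0], 0), ([0.5, 1.5], 0)]
w, b = train_head(data)
print([predict(w, b, x) for x, _ in data])  # [1, 1, 0, 0]
```

Only two weights and a bias are learned here, which is why transfer learning can work with so little data: the frozen layers already do most of the representational work.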

Exploring Neural Architecture Search (NAS)

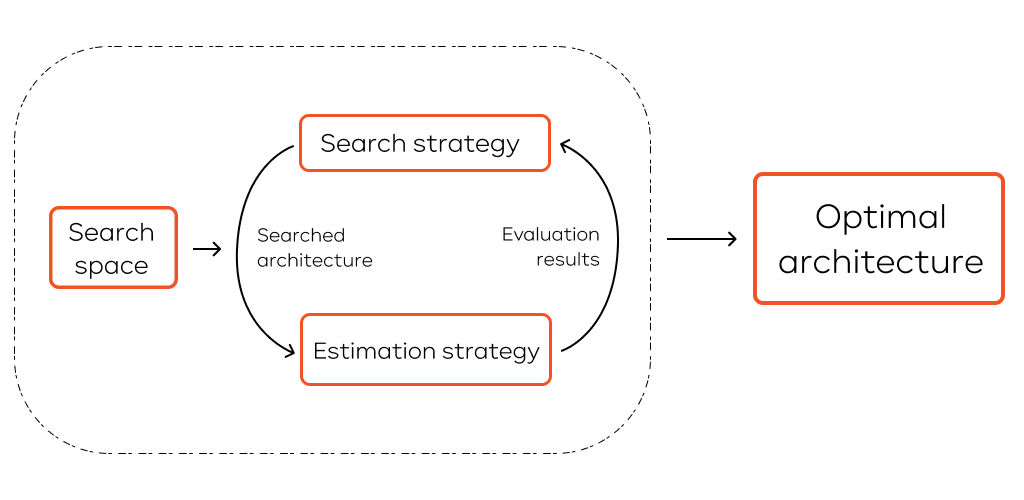

Neural Architecture Search, or NAS, searches for strong network designs without manual trial and error. It automates architecture decisions, such as which layer types to use and how to connect them.

A well-designed NAS setup can factor pruning, quantization, and distillation into the search from the outset. This holistic approach can produce networks that are both accurate and computationally light. Although NAS may need heavy computing resources, it often pays off: you end up with a network tailored to your needs.
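To show what "automating architecture decisions" means, here is a deliberately tiny random-search NAS in Python. Everything in it is an assumption for illustration: the three-option search space, the 0.5 MB size budget, and especially `proxy_score`, a hypothetical stand-in for the expensive step of training and evaluating each candidate. Including weight precision in the search space shows how a quantization choice can be folded into the search itself.

```python
import math
import random

# Toy search space: depth, width, and weight precision of a stack of dense layers.
SEARCH_SPACE = {
    "num_layers": [2, 4, 8],
    "width": [64, 128, 256],
    "weight_bits": [8, 32],
}

def estimate_size_mb(arch, input_dim=256):
    """Approximate weight storage of a stack of dense layers (biases ignored)."""
    params = input_dim * arch["width"] + (arch["num_layers"] - 1) * arch["width"] ** 2
    return params * arch["weight_bits"] / 8 / 1_000_000

def proxy_score(arch):
    """HYPOTHETICAL stand-in for 'train briefly and measure validation accuracy'.
    Rewards capacity with diminishing returns; assumes a small penalty for 8-bit weights."""
    capacity = arch["num_layers"] * arch["width"]
    penalty = 0.1 if arch["weight_bits"] == 8 else 0.0
    return math.log(capacity) - penalty

def random_search_nas(n_trials=30, size_budget_mb=0.5, seed=0):
    """Sample architectures, reject those over the size budget, keep the best-scoring one."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        arch = {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}
        size = estimate_size_mb(arch)
        if size > size_budget_mb:
            continue  # too large for the assumed deployment target
        score = proxy_score(arch)
        if best is None or score > best[0]:
            best = (score, arch, size)
    return best

score, arch, size = random_search_nas()
print(f"best architecture: {arch}, ~{size:.2f} MB")
```

Production NAS systems usually replace random sampling with reinforcement learning, evolutionary search, or gradient-based methods. The costly part is the real equivalent of `proxy_score`, which is why NAS can demand so much compute.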

_______________________________________________________________________

Unsure where to start AI model optimization?

Use IT-Magic’s expert guidance and support. We’ll show you how AI optimization techniques can save time and money in your AI projects.

_______________________________________________________________________

Key techniques for AI model optimization

Now, let’s dive deeper into proven AI model optimization techniques. Each tactic targets a different area of efficiency. You can mix them to get the best results.

Pruning

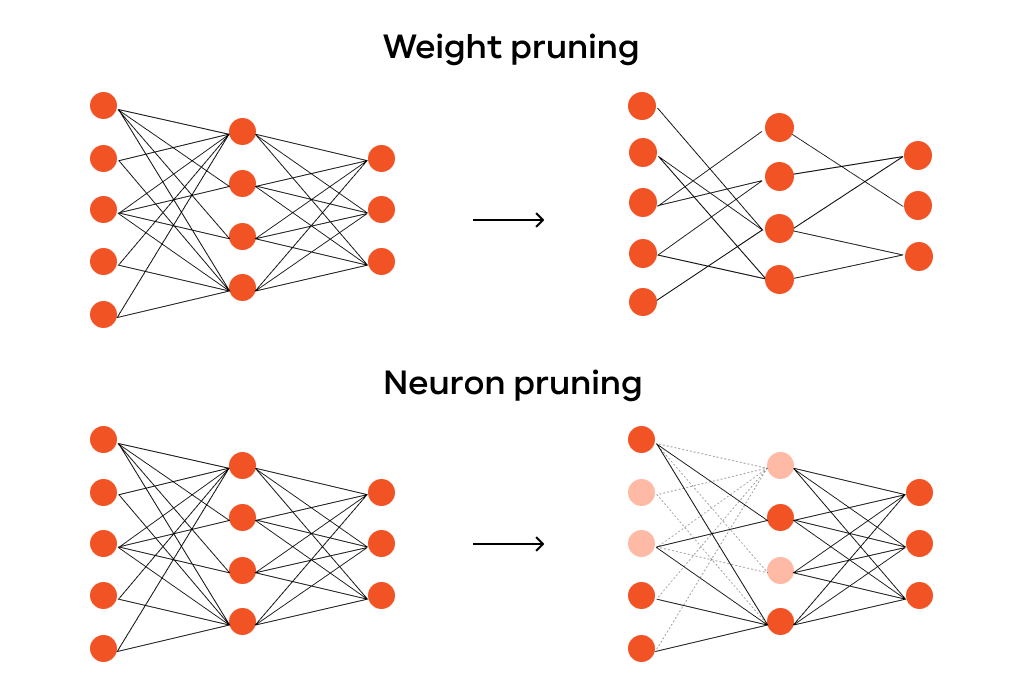

Model pruning removes weights or neurons that add little value. There are two main types:

- Weight pruning: Zero out small weights below a certain threshold.

- Neuron pruning (or channel pruning): Drop entire neurons or filters that barely help performance.

Many labs and institutes, including MIT CSAIL, have shown that pruning can shrink a model by half (or more) with little accuracy loss.

So, what is model pruning in plain terms? It's about removing clutter so your model runs faster. By pruning your model, you cut hidden complexity and can improve real-time results.
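Both flavors of weight pruning described above come down to a few lines of code. This minimal Python sketch works on a flat list of toy weights: one function applies a fixed magnitude threshold, the other removes a chosen fraction of the smallest weights. Zeroed weights only save time if the runtime exploits sparsity; removing whole neurons or channels shrinks the actual matrices. In PyTorch, `torch.nn.utils.prune.l1_unstructured` applies the same magnitude idea to a layer's weight tensor.

```python
def prune_by_threshold(weights, threshold):
    """Zero every weight whose magnitude is below `threshold`."""
    return [0.0 if abs(w) < threshold else w for w in weights]

def prune_by_sparsity(weights, sparsity):
    """Zero the fraction `sparsity` of weights with the smallest magnitudes."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    k = int(len(weights) * sparsity)  # number of weights to remove
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned

def sparsity_of(weights):
    """Fraction of weights that are exactly zero."""
    return sum(1 for w in weights if w == 0.0) / len(weights)

layer = [0.8, -0.02, 0.5, 0.01, -0.6, 0.03]  # toy weights for one layer
print(prune_by_threshold(layer, 0.05))  # [0.8, 0.0, 0.5, 0.0, -0.6, 0.0]
print(prune_by_sparsity(layer, 0.5))    # [0.8, 0.0, 0.5, 0.0, -0.6, 0.0]
```

After pruning, models are usually fine-tuned for a few epochs so the remaining weights can compensate for the removed ones.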

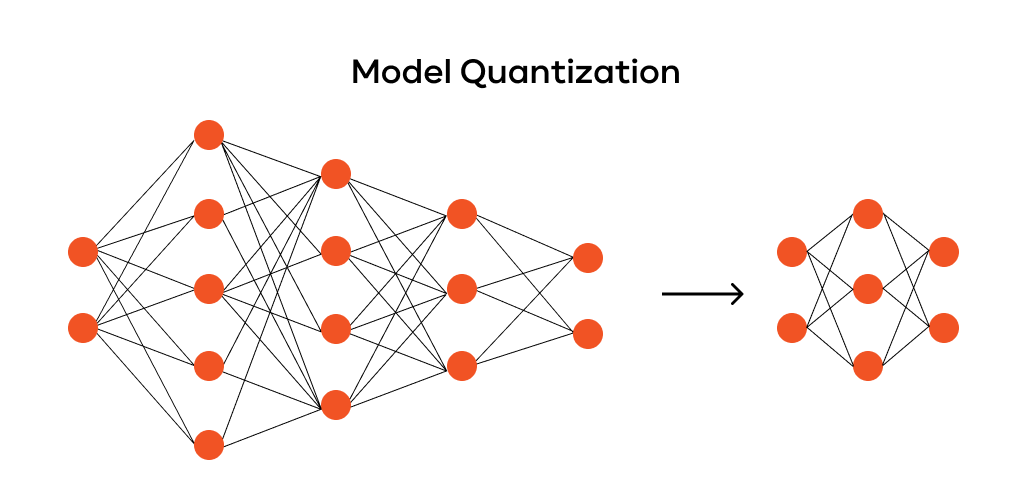

Quantization

Model quantization lowers the numeric precision of a network's parameters. For example, you may switch from 32-bit floats to 16-bit floats or 8-bit integers. This simple tweak lowers memory use and can speed up calculations on hardware that supports low-precision arithmetic. Two common quantization techniques are:

- Post-training quantization – reducing model size and computational needs after training. This technique doesn’t require retraining the model from scratch.

- Quantization-aware training – preparing a model for efficient deployment in an environment with limited resources. It simulates quantization during training, so the model learns to tolerate the reduced precision.

Both methods seek to keep accuracy high while still letting you leverage the memory and speed gains. AI model quantization has proven vital in edge scenarios. By shrinking the model, you can run it on lower-power hardware.
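A minimal sketch of post-training quantization, assuming the simplest scheme: symmetric, per-tensor int8 quantization with a single scale factor. Each float is divided by the scale, rounded, and clipped to the int8 range; dequantizing multiplies back, and the rounding error per weight is at most half the scale. Real toolkits add refinements such as per-channel scales and calibration data.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: floats -> int8 codes plus one float scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale

def dequantize(codes, scale):
    """Map int8 codes back to approximate float values."""
    return [c * scale for c in codes]

weights = [0.42, -1.27, 0.003, 0.9, -0.5]  # toy float32 weights
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes)      # [42, -127, 0, 90, -50]
print(max_error)  # at most scale / 2 (about 0.005 here)
```

Quantization-aware training inserts this same quantize-then-dequantize round trip ("fake quantization") into the forward pass during training, so the model learns weights that survive the rounding.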

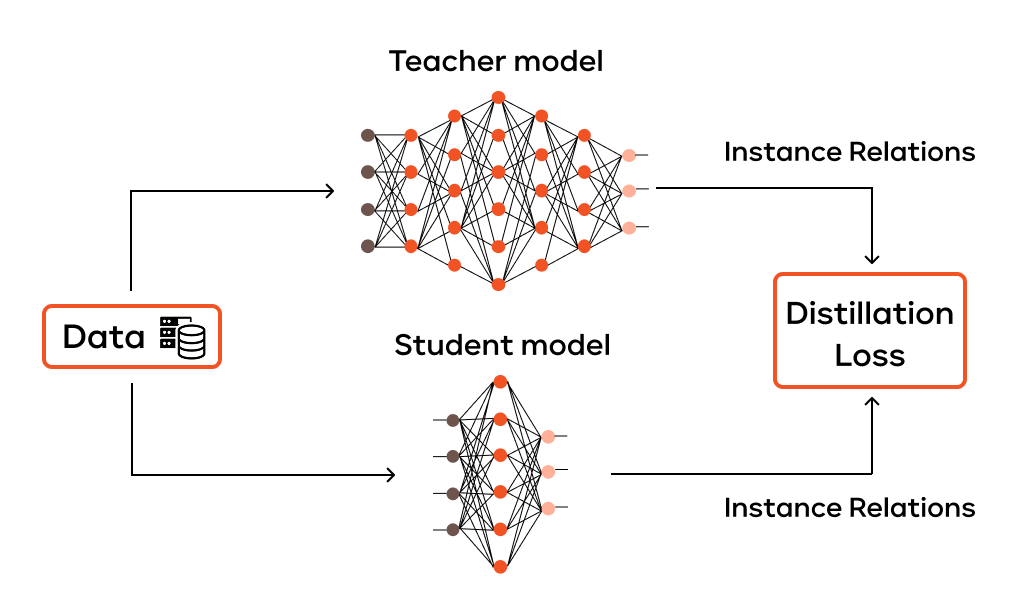

Knowledge distillation

Knowledge distillation involves a larger “teacher” model and a smaller “student” model. The student learns from the teacher’s output probabilities, not just from labeled data. Those probabilities carry extra information, such as which wrong classes the teacher considers close to the right one. Often, the result is a lighter model with similar performance.

Distillation also helps the student model capture the teacher’s nuanced decision boundaries. This is handy in tasks like image classification or text analysis. In many cases, knowledge distillation can yield a robust model that’s easier to run in production.
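The common soft-target formulation introduced by Hinton et al. can be sketched in plain Python. The loss mixes ordinary cross-entropy on the true label with a KL-divergence term that pulls the student's temperature-softened probabilities toward the teacher's. The temperature of 4 and the 50/50 weighting `alpha` are illustrative assumptions, not recommended settings.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, label, temperature=4.0, alpha=0.5):
    """alpha * cross-entropy(true label) + (1 - alpha) * T^2 * KL(teacher || student) at T."""
    # Hard-label term: the usual cross-entropy against the ground-truth class.
    hard = -math.log(softmax(student_logits)[label])
    # Soft-label term: how far the student's softened outputs are from the teacher's.
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student))
    # Multiplying by T^2 keeps the soft term's gradient scale comparable as T changes.
    return alpha * hard + (1 - alpha) * temperature ** 2 * kl

teacher = [3.0, 1.0, 0.2]  # illustrative teacher logits for a 3-class problem
print(distillation_loss([2.8, 1.1, 0.3], teacher, label=0))  # student close to the teacher
print(distillation_loss([0.2, 1.0, 3.0], teacher, label=0))  # student far from the teacher
```

During training, the student's weights are updated to minimize this loss while the teacher stays fixed.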

Hyperparameter tuning

Hyperparameter tuning, discussed earlier, is crucial enough to mention again. Even small shifts in learning rate, dropout rate, or momentum can change model quality. Automated tools like Ray Tune and Optuna streamline these experiments. They gather metrics, compare runs, and pick the best hyperparameter set. This cyclical process saves weeks of guesswork for big projects.

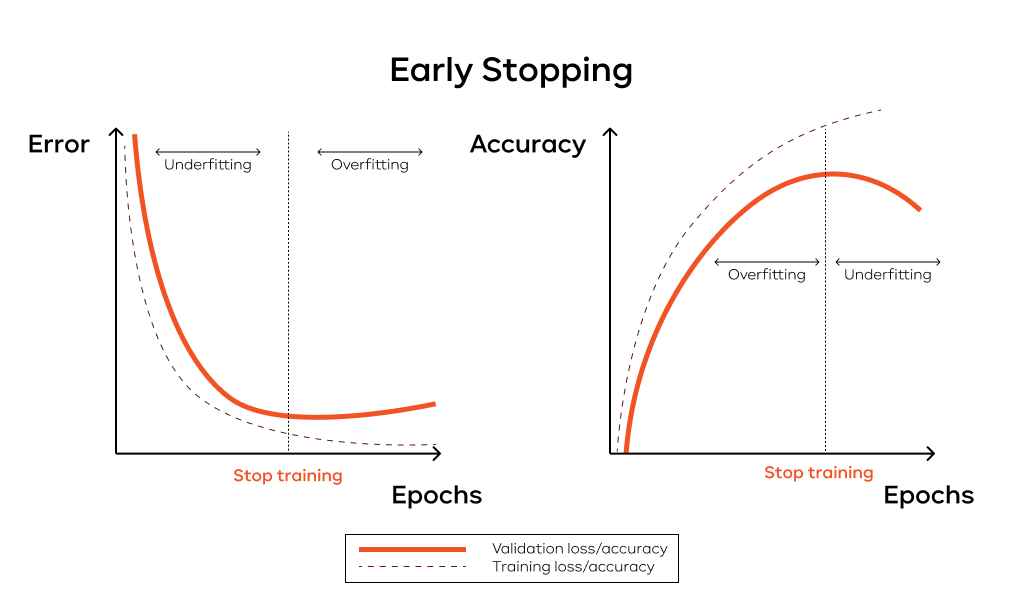

Early stopping

Early stopping is straightforward but powerful. You stop training when validation accuracy or loss stops improving. This prevents overfitting and curbs resource spending. Early stopping is important if your training loop is expensive. It also helps you avoid letting your model “memorize” the training data, which harms real-world performance.
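Early stopping is usually implemented with a "patience" counter: training continues until the validation loss has failed to improve for a set number of consecutive epochs. The minimal Python sketch below replays a list of made-up validation losses instead of training a real model; the `run` helper is a hypothetical harness for illustration.

```python
class EarlyStopping:
    """Signal a stop once validation loss has not improved for `patience` consecutive epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta  # smallest decrease that counts as an improvement
        self.best = float("inf")
        self.epochs_without_improvement = 0

    def step(self, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience

def run(val_losses, patience=3):
    """Replay per-epoch validation losses; return (stop_epoch, best_loss)."""
    stopper = EarlyStopping(patience=patience)
    for epoch, loss in enumerate(val_losses, start=1):
        # In real training, one epoch of training and a validation pass would happen here.
        if stopper.step(loss):
            return epoch, stopper.best
    return len(val_losses), stopper.best

# Illustrative losses: improvement stalls after epoch 4.
val_losses = [0.90, 0.72, 0.61, 0.58, 0.59, 0.60, 0.58, 0.62, 0.65]
print(run(val_losses))  # (7, 0.58)
```

Keras offers the same behavior through `tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=3, restore_best_weights=True)`. The last option also rolls the model back to its best epoch, which this sketch does not do.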

Increased speed and efficiency

When these methods work together, your AI solution runs faster with less lag. This speed is huge for real-time tasks. It also helps if you deploy many concurrent sessions, such as in e-commerce or large social networks. A smaller model that’s been pruned or quantized can handle high volumes without slowdowns.

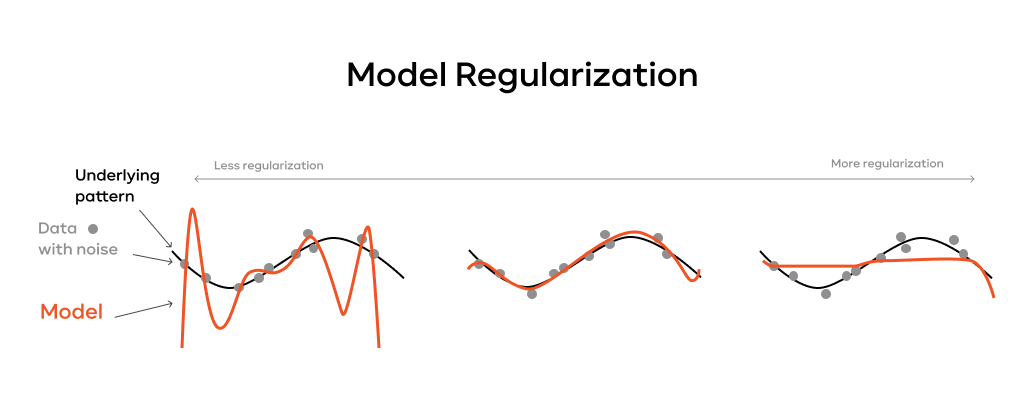

Improved generalization

Lean models often avoid the trap of memorizing noise in your data. Techniques like regularization, pruning, and quantization can help your AI learn only what matters. That means your AI system can adapt better to unseen inputs. It’s a safety net against overfitting.

Cost reduction

If your model is too large, you might need more GPUs or specialized hardware to run it. By optimizing your architecture, you can achieve the same results with fewer machines. That lowers your bills for electricity, cooling, and cloud instance fees. These savings matter even more for organizations that process millions of queries a day.

Scalability

An efficient model scales more easily to meet extra demand. When usage spikes, you won’t need to double your servers just to keep up. Pruned or quantized models require fewer resources, so you can maintain stable performance with less overhead. That stability makes life easier for DevOps teams as well.

Examples of industries benefiting from optimized AI models

Artificial intelligence is now used in almost every industry, and each of them can take advantage of AI model optimization. Let’s review a few examples of sectors that gain from optimizing their AI:

Retail and e-commerce: Lean recommendation engines give instant product suggestions. That boosts conversions and enhances the user experience.

Autonomous vehicles: Self-driving cars need immediate decisions. Optimized object detection and route-planning models help reduce collisions.

Agriculture: Drones use smaller neural networks to detect crop issues in real time. Farmers can respond faster to pests or diseases.

Smart cities: Optimized networks process traffic camera feeds, easing congestion. They can also manage security tasks with fewer computational demands.

Energy: AI forecasts demand, manages renewable energy, and advances smart grids. Optimized models ensure efficient energy distribution and operations.

Entertainment and media: Streaming platforms need AI for content recommendation and audience analysis. Optimized models can enhance user experience and engagement.

To see how artificial intelligence helps and how you can select the right platform, read our Best AI Cloud Platforms article.

Challenges in model optimization

AI model optimization can be tricky. It involves trade-offs, resource limits, and ongoing changes in data patterns. But recognizing these challenges upfront helps you plan better. Let’s look into each of them in more detail.

Trade-offs between model complexity and performance

Large models offer more depth for representation. Yet, they can be slow or power-hungry. Sometimes, a complex architecture is worth it, especially for tasks like language generation. Other times, a trimmed-down design can meet your key goals without excess overhead.

Teams often compare multiple architectures side by side. This testing helps them find the sweet spot between speed, memory, and accuracy. Each use case is different, but a common winning approach pairs moderate model complexity with a well-tuned pruning strategy.

The difficulty of choosing the right optimization techniques

Model pruning and quantization, as well as knowledge distillation, all have unique pros and cons. If you run on embedded devices, quantization is often a must. For large language models, knowledge distillation might be more relevant. With so many options, it’s easy to feel overwhelmed.

You can tackle this by analyzing your constraints and performance goals. Then, experiment with a few methods in smaller tests. Specialized teams or consultants can help you pick the right path.

Quality and accessibility of data

Even the best architecture can fail due to poor data. If your dataset is incomplete or biased, optimization can only do so much. You still risk flawed outputs. The solution is to refine your data pipeline:

- Clean missing entries

- Standardize features

- Re-check labeling quality

Optimizing the model itself won’t fix data problems. Good data must be your priority.

Preventing overfitting and ensuring generalization

Techniques like early stopping and dropout can reduce overfitting. Yet pushing model pruning or quantization too far can also degrade performance. A balanced strategy tracks training, validation, and test results. Remember to check your final model on real-world inputs; that’s how you confirm it generalizes well.

Availability of computational resources

Cutting-edge methods like Neural Architecture Search (NAS) can speed up your quest for perfect networks. But they often need massive computing power. Small companies might lack these resources. Still, you can try simpler steps first. For instance, standard hyperparameter tuning and mild pruning can deliver solid gains with minimal hardware.

On the cloud side, you can rent computing time on demand. Keep a close watch on your resource usage to avoid big bills. If done wisely, you get the best of both worlds: strong computing with minimal overhead.

Adapting to evolving data

Real-world data changes. A weather prediction model might need to handle sudden shifts. A recommendation engine might see user interests drift. In these cases, continuous learning can keep your model fresh. Leaner models, in particular, are simpler to retrain. This loop helps you keep pace with new events or demands.
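Continuous learning needs a signal for when to retrain. One very simple check, sketched below in Python as an illustration rather than a recommended method, compares the mean of recent production values for a feature with the training-time mean and flags drift when the gap exceeds a few standard errors. The feature values and the threshold of 3 are made up.

```python
from statistics import mean, stdev

def drift_detected(reference, recent, threshold=3.0):
    """Flag drift when the recent mean sits more than `threshold` standard errors
    away from the mean of the reference (training-time) data."""
    ref_mean = mean(reference)
    std_err = stdev(reference) / len(recent) ** 0.5
    z = abs(mean(recent) - ref_mean) / std_err
    return z > threshold

# Illustrative values of one input feature at training time and in production.
reference = [10, 11, 9, 10, 12, 8, 10, 11, 9, 10]
print(drift_detected(reference, [10, 9, 11, 10]))  # False: same distribution
print(drift_detected(reference, [15, 16, 14, 15]))  # True: the feature has shifted
```

A `True` result is the cue to retrain. Production systems typically rely on more robust statistics, such as a Kolmogorov-Smirnov test or the population stability index, and monitor many features at once.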

Seamless integration with current systems

Deploying an optimized AI model shouldn’t disrupt your entire operation. But sometimes, older frameworks or legacy code can cause conflicts. Hence, plan your deployment pipeline well. Make sure it connects with your data ingestion points, monitoring tools, and live services.

You might wonder who can advise you on real-world AI systems. That’s where skilled AI consultants step in.

Future of AI model optimization

As artificial intelligence evolves, so do its optimization methods. The coming years will likely bring fresh ideas and frameworks, making AI optimization more accessible.

Emerging technologies

Researchers are pushing the envelope with advanced learning methods and quantum computing. Quantum algorithms may someday shrink training times or improve architecture search. Also, new forms of regularization and specialized hardware keep popping up. These breakthroughs may reshape how we approach AI model optimization.

Automated optimization

Tools that automate hyperparameter searches or model pruning are on the rise. AutoML platforms streamline tasks that once needed an expert. This trend will help more people build effective AI without deep knowledge of each step. Automated approaches also reduce the trial-and-error behind manual optimization.

Ecosystem changes

Frameworks like TensorFlow, PyTorch, and JAX continue to add new performance options. Third-party libraries—such as TensorRT or ONNX Runtime—focus on speed. They also enable cross-platform optimization. Expect further integration with cloud platforms that offer prebuilt routines for AI tasks. This synergy helps companies design, optimize, and deploy models at scale.

To learn more about picking a strong foundation for your AI work, see our guide on AI as a Service (AIaaS).

Conclusion

AI model optimization is now a core practice in building robust AI solutions. It cuts waste by pruning unneeded parameters, converting to lower-precision formats, and fine-tuning hyperparameters. These steps boost performance without draining your budget. From e-commerce to manufacturing, optimized models excel when responding to shifting data.

Using AI model optimization techniques, you can keep your AI relevant even as user demands or data trends shift. Smaller, well-structured models also help you adapt faster: they let you retrain and deploy solutions quickly without huge costs.

If you want to improve your AI’s reliability and speed, now is the time to act.

Explore your optimization options with IT-Magic!

Make your solution fast, accurate, cost-efficient, and more sustainable. Let’s optimize your AI for today’s needs and tomorrow’s growth.

FAQ

How can model optimization influence the long-term sustainability of AI systems?

By trimming computational loads, you reduce energy use and cut costs. This makes AI more sustainable. With efficient models, you also adapt faster when data changes. That means less frequent large-scale retraining. Over time, you protect your AI from becoming obsolete.

What role does efficiency play in scaling AI models for large-scale applications?

When your application scales, optimized models remain responsive. They handle growth without a spike in hardware needs or lag. This approach keeps user experiences positive and budget demands in check. It also helps with tasks like real-time detection, where speed is vital.

How does the process of AI optimization affect data security and privacy concerns?

Well-optimized models can run on edge devices, meaning they rely less on transmitting raw data to the cloud. This local approach can bolster privacy since fewer sensitive details leave the user’s device. Still, you must follow privacy rules (like GDPR) when collecting or processing data. Proper encryption and secure data flows complete the picture.